Post originally published on Medium

For the past couple of years, I’ve had a growing interest in neurotechnology. This blog post is about sharing the things I’ve learnt along the way and hopefully helping people who want to get started!

Before diving into the topic, I thought I would briefly talk about how I got into it in the first place.

Back story

---

I don’t have a background in Computer Science (I studied advertising and marketing), so I learnt to code by doing an immersive coding bootcamp at General Assembly.

While I was looking for my 1st job, I started tinkering with JavaScript and hardware and the 1st project I ever worked on was to control a Sphero robotic ball using the movements of my hand over a Leap Motion.

It was the first time I used JavaScript to control things outside of the browser and I was instantly hooked!

Since then, I’ve spent a lot of my personal time prototyping interactive projects and every time, I try to challenge myself a little bit more to learn something new.

After experimenting with a few different devices, I was looking for my next challenge and this is when I came across my first brain sensor, the Neurosky.

First experiments with a brain sensor

---

When I started getting interested in experimenting with brain sensors, I decided to start by buying a Neurosky because it was a lot less expensive than other options.

I didn’t really know if I would have the skills to program anything for it (I had just finished my coding bootcamp), so I didn’t want to waste too much money. Luckily, there was already a JavaScript framework built for the Neurosky so I could get started pretty easily. I worked on using my level of focus to control a Sphero and a Parrot AR drone.

I quickly realised that this brain sensor wasn’t super accurate. It only has 3 sensors, so it gives you your level of “attention” and “meditation” but in a pretty irregular way. They also give you access to the raw data coming from each sensor, so you can build things like a visualizer, but 3 sensors is really not enough to draw any kind of conclusion about what is happening in your brain.

As I was doing some research about other brain sensors available, I came across the Emotiv Epoc. It looked like it had more features so I decided to buy it so I could keep experimenting with BCIs.

Before explaining how this headset works, let’s talk briefly about the brain.

How does the brain work

---

I am definitely not an expert in neuroscience so my explanation is going to be incomplete but there are a few basic things you need to know if you want to have a better understanding of brain sensors and neurotechnology.

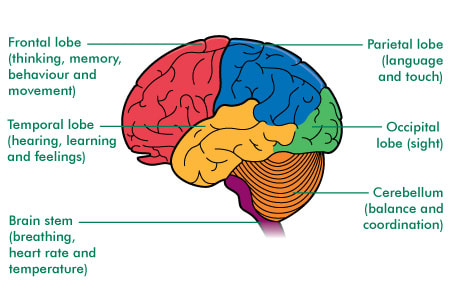

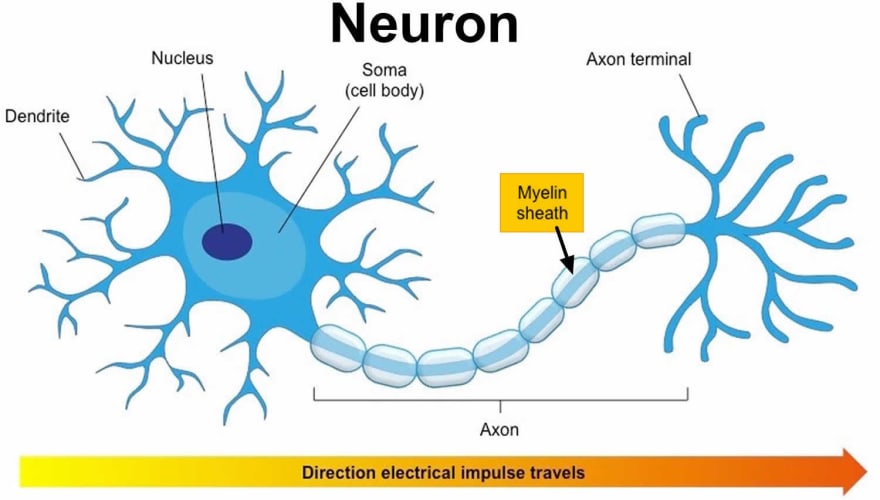

The brain is made of billions and billions of neurons. These neurons are specialised cells that process information and instead of being randomly spread out, we know that the brain is organised in different parts responsible for different physiological functions.

For example, let’s take: moving.

In the brain, parts responsible for movement and coordination include the primary motor cortex (in the frontal lobe) and the cerebellum. When coordinating movements, neurons in these parts are triggered and send signals along their axons down the spinal cord. These signals then trigger motor neurons that activate muscles and result in movements.

As I said before, this is a very simplistic explanation but the most important thing is that these electrical signals fired can actually be tracked by an EEG (Electroencephalography) device on the surface of the scalp.

Other systems can be used to track the activity of the brain, but they are usually a lot more invasive and expensive, and they require surgery. For example, you also have ECoG (Electrocorticography) where implants are placed inside the skull.

Hopefully this made sense and we can now spend some time talking about how the Emotiv Epoc tracks these electrical signals.

How does a brain sensor work

---

Emotiv has 3 different devices available:

- The Emotiv Insight

- The Epoc Flex

- The Emotiv Epoc

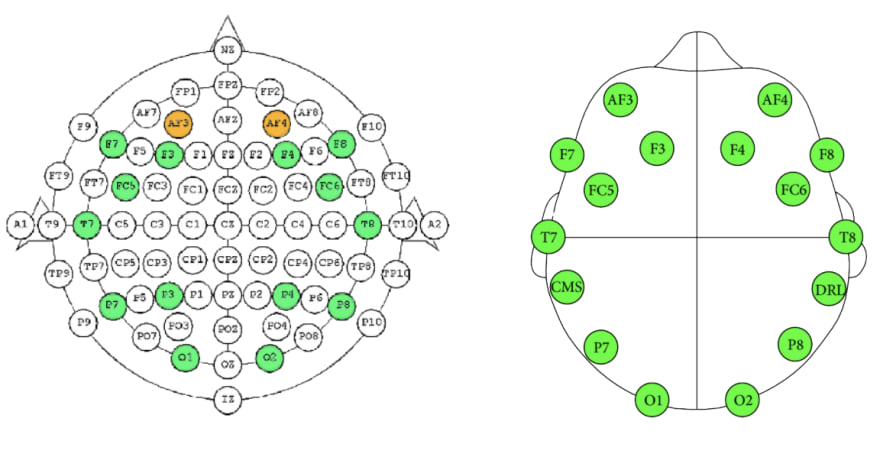

The Epoc has 14 sensors (also called channels) placed all around the head.

The 10/20 international EEG system (on the left below), is used as a reference to describe and apply the location of scalp electrodes. It is based on the relationship between the location of an electrode and the underlying area of the brain. This way, it allows a certain standard across devices and scientific experiments.

In green and orange, you can see which sensors are used on the Epoc (on the right).

As you can see, even if 14 channels may sound like a lot, it’s actually far fewer than the number of sensors on a medical device. However, they seem to be distributed pretty well around the head.
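To make the naming convention a bit more concrete, here is a small sketch that decodes a 10/20-style electrode label into a scalp region and a hemisphere. The channel list is the Epoc's 14 channels as commonly documented; the `describeElectrode` helper and the `REGIONS` table are illustrative names, not part of any Emotiv API.

```javascript
// The 14 Epoc channels, using 10/20-style labels.
const EPOC_CHANNELS = [
  'AF3', 'F7', 'F3', 'FC5', 'T7', 'P7', 'O1',
  'O2', 'P8', 'T8', 'FC6', 'F4', 'F8', 'AF4',
];

// Letter prefix -> scalp region (illustrative lookup table).
const REGIONS = {
  AF: 'anterior frontal',
  F: 'frontal',
  FC: 'fronto-central',
  T: 'temporal',
  P: 'parietal',
  O: 'occipital',
};

// Odd numbers sit over the left hemisphere, even numbers over the right.
// Midline labels ending in "z" (e.g. Cz) are not handled here because
// none of the 14 channels above use them.
function describeElectrode(label) {
  const match = /^([A-Z]+)(\d+)$/.exec(label);
  if (!match) throw new Error(`Unrecognised electrode label: ${label}`);
  const [, prefix, digits] = match;
  const region = REGIONS[prefix];
  if (!region) throw new Error(`Unknown region prefix: ${prefix}`);
  const hemisphere = Number(digits) % 2 === 1 ? 'left' : 'right';
  return { label, region, hemisphere };
}

console.log(EPOC_CHANNELS.map(describeElectrode));
```

Running it lists each channel with its region and side, which makes it easier to see that the sensors cover the front, sides and back of the head on both hemispheres.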

The Epoc samples internally at 2048 samples per second (SPS), downsampled to 128 SPS or 256 SPS, and its frequency response is between 0.16 and 43 Hz.

What this means is that the headset internally takes 2048 samples per second from a continuous signal, delivers 128 or 256 of them per second after downsampling, and picks up frequencies from 0.16 Hz to 43 Hz.
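As a rough sanity check on those numbers: a sampled signal can only represent frequencies up to half its sampling rate (the Nyquist limit). The sketch below uses the figures quoted above; the function names are illustrative.

```javascript
// Highest frequency a given sampling rate can represent (Nyquist limit).
function nyquistLimit(samplesPerSecond) {
  return samplesPerSecond / 2;
}

// Time between two consecutive samples, in milliseconds.
function samplePeriodMs(samplesPerSecond) {
  return 1000 / samplesPerSecond;
}

const MAX_FREQUENCY_HZ = 43; // upper end of the Epoc's frequency response

for (const sps of [128, 256]) {
  const limit = nyquistLimit(sps);
  console.log(
    `${sps} SPS: one sample every ${samplePeriodMs(sps).toFixed(2)} ms, ` +
    `Nyquist limit ${limit} Hz, covers ${MAX_FREQUENCY_HZ} Hz: ${limit > MAX_FREQUENCY_HZ}`
  );
}
```

Even at the lower output rate of 128 SPS, the limit is 64 Hz, so the 43 Hz upper bound of the frequency response fits comfortably.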

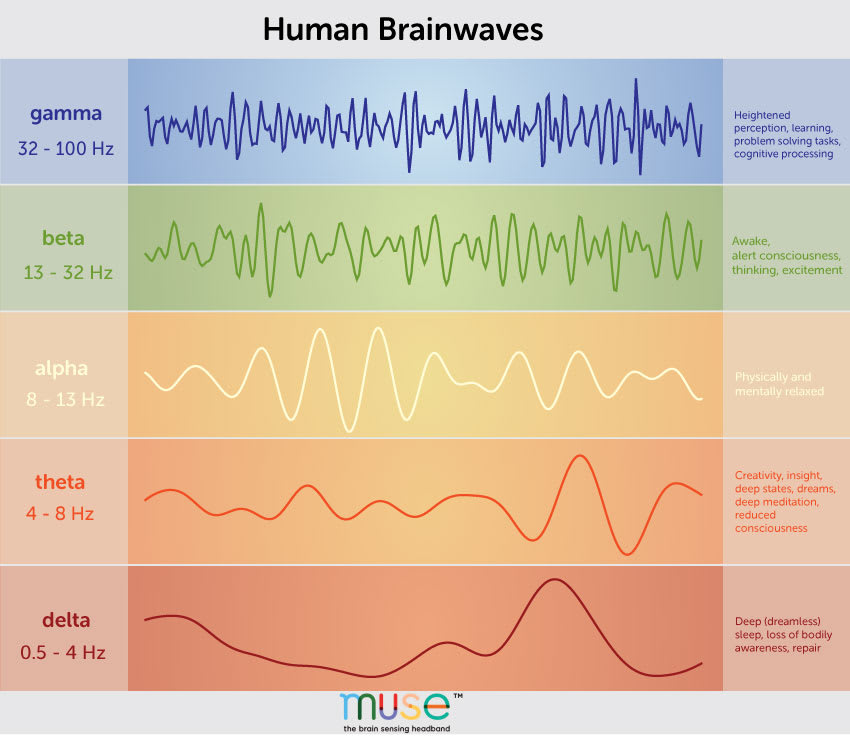

If we look at the different types of brain waves, we can see that they operate between 0.5Hz and 100Hz.

Why is this important? Because depending on the type of application we want to build with our device, we might want to focus only on certain waves operating on specific frequencies. For example, if we want to build a meditation app, we might want to only focus on the theta waves, operating between 4–8Hz.
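As an illustration, here is a minimal sketch of a lookup that tells you which band a given frequency falls into. The band boundaries are commonly quoted values and vary a little between sources, and `bandFor` is just an illustrative helper.

```javascript
// Commonly quoted EEG bands; exact boundaries vary between sources.
const BANDS = [
  { name: 'delta', minHz: 0.5, maxHz: 4 },
  { name: 'theta', minHz: 4, maxHz: 8 },
  { name: 'alpha', minHz: 8, maxHz: 13 },
  { name: 'beta', minHz: 13, maxHz: 30 },
  { name: 'gamma', minHz: 30, maxHz: 100 },
];

// Returns the name of the band containing the frequency
// (lower bound inclusive, upper bound exclusive), or null if none matches.
function bandFor(frequencyHz) {
  const band = BANDS.find((b) => frequencyHz >= b.minHz && frequencyHz < b.maxHz);
  return band ? band.name : null;
}

console.log(bandFor(6));  // theta
console.log(bandFor(10)); // alpha
```

For the meditation example, an app would only keep the part of the signal where `bandFor` returns `'theta'`.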

Now that we know how the device works, let’s talk about what it allows you to track.

Features

---

The Emotiv software is not open-source so you don’t have access to the raw data from each sensor. Instead, they give you access to:

- Accelerometer and gyroscope axes.

- Performance metrics (level of excitement, engagement, relaxation, interest, stress and focus)

- Facial expressions (blink, wink left and right, surprise, frown, smile, clench, laugh, smirk)

- Mental commands (push, pull, lift, drop, left, right, rotate left, rotate right, rotate clockwise, rotate counter clockwise, rotate reverse, disappear)

Only the mental commands require training from each user. To train these “thoughts”, you have to download their software.

Once you’ve done some training, a file is saved either locally or in the cloud.

If you want to write your own program, you can use their Cortex API, their community SDK (they stopped maintaining it after v3.5) or, if you want to use JavaScript, you can use the framework I’ve worked on, epoc.js.

Epoc.js

---

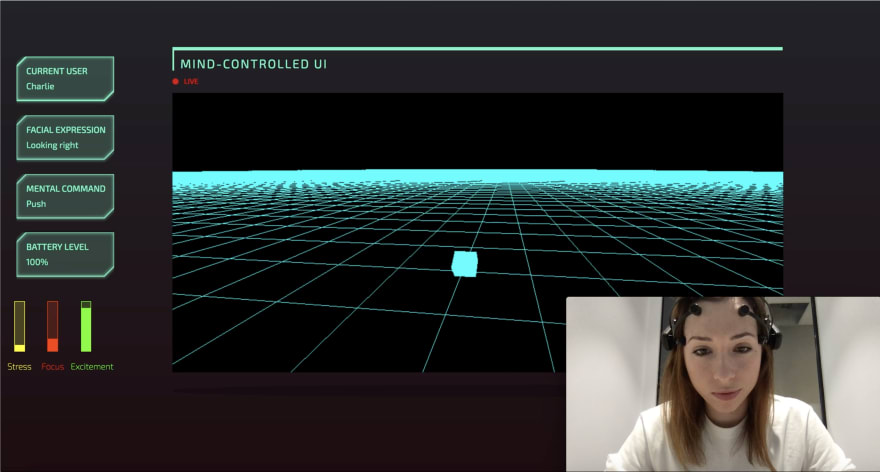

Epoc.js is a framework to interact with the Emotiv Epoc and Insight in JavaScript. It gives you access to the same features mentioned above (accelerometer/gyroscope data, performance metrics, facial expressions and mental commands), as well as allowing you to interact with the emulator.

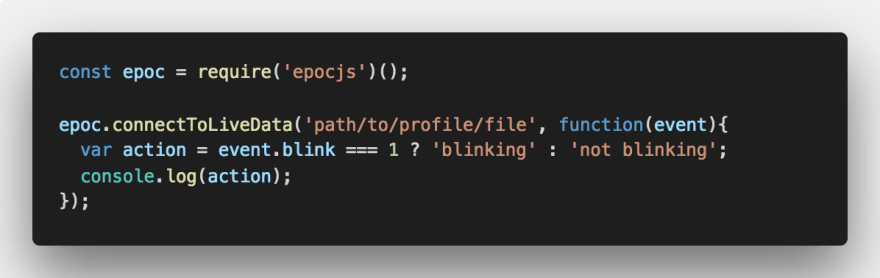

You only need a few lines of code to get started:
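Here is a minimal sketch of that getting-started code, based on the description that follows. The `connectToLiveData` method and the `blink` property come from this post; the module name `epocjs`, the profile path and the `logBlinks` helper are assumptions, and a fake object stands in for the headset so the snippet runs without hardware.

```javascript
// Logs a message whenever the headset reports a blink.
// `epoc` is expected to expose connectToLiveData(profilePath, callback).
function logBlinks(epoc, profilePath, log = console.log) {
  epoc.connectToLiveData(profilePath, (event) => {
    // Each property is 0 when inactive and 1 when active.
    if (event.blink === 1) {
      log('Blink detected');
    }
  });
}

// With a real headset (module name and path are assumptions):
//   const epoc = require('epocjs')();
//   logBlinks(epoc, '/path/to/trained-profile');

// Hypothetical stand-in so the sketch runs without hardware:
// it replays three readings, one of which is a blink.
const fakeEpoc = {
  connectToLiveData(profilePath, callback) {
    [{ blink: 0 }, { blink: 1 }, { blink: 0 }].forEach((event) => callback(event));
  },
};

logBlinks(fakeEpoc, 'unused-by-the-fake');
```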

In the code sample above, we start by requiring and instantiating the node module. We then call the method connectToLiveData and pass it the path to the user file that is saved after training. We get a callback with an object containing the different properties we can track. For example, if we want to track if the user is blinking or not, we use event.blink.

Each property comes back either as a 0 if it is not activated or 1 if it is activated.

The full list of properties available can be found on the README of the repository.

In the background, this framework was built using the Emotiv C++ SDK, Node.js and 3 node modules: Node-gyp, Bindings and Nan.

This is the old way of creating a node-addon so if you are interested in learning more about that, I’d recommend looking into N-API.

So, now that we talked about the different features and how to get started, here’s a few prototypes I built so far.

Prototypes

---

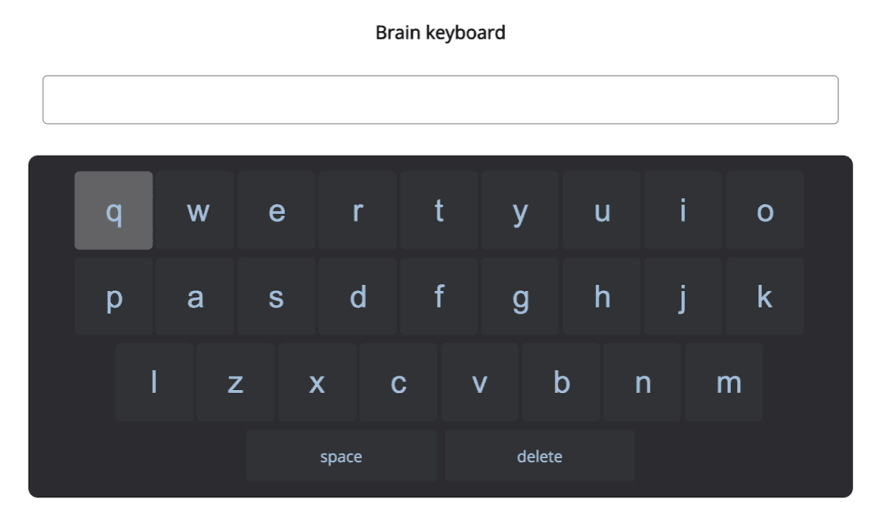

Brain keyboard

The first demo I ever built with the Emotiv Epoc was a brain keyboard. The goal was to see if I could build a quick interface to allow people to communicate using facial expressions.

Using eye movements, looking right or left highlights the letter on the right/left, and blinking selects the letter and displays it in the input field.
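The selection logic can be sketched as a small state update function. This is not the prototype's actual code: `lookingLeft` and `lookingRight` are hypothetical property names (the README has the real list), and wrapping around at the ends of the alphabet is a choice made for this example.

```javascript
const LETTERS = 'ABCDEFGHIJKLMNOPQRSTUVWXYZ'.split('');

// Pure state update: `state` is { index, text }, `event` is one headset reading.
// `lookingLeft` / `lookingRight` are hypothetical property names.
function nextKeyboardState(state, event) {
  if (event.blink === 1) {
    // Blinking selects the highlighted letter and appends it to the input.
    return { ...state, text: state.text + LETTERS[state.index] };
  }
  if (event.lookingRight === 1) {
    return { ...state, index: (state.index + 1) % LETTERS.length };
  }
  if (event.lookingLeft === 1) {
    return { ...state, index: (state.index - 1 + LETTERS.length) % LETTERS.length };
  }
  return state; // nothing relevant happened in this reading
}

// Simulated readings: right, right, blink, left, blink.
const events = [
  { lookingRight: 1 },
  { lookingRight: 1 },
  { blink: 1 },
  { lookingLeft: 1 },
  { blink: 1 },
];

const finalState = events.reduce(
  (state, event) => nextKeyboardState(state, event),
  { index: 0, text: '' }
);
console.log(finalState); // { index: 1, text: 'CB' }
```

Keeping the update as a pure function makes it easy to test with simulated readings before plugging in the headset.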

It’s a very simple-looking prototype but it works!!

WebVR

My 2nd prototype involves the mental commands. I wanted to see if I could navigate a 3D space only using thoughts.

In this prototype, I used Three.js to create the basic 3D scene, epoc.js to track the mental commands and web sockets to send them from the server to the front-end.
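On the front-end side, the core idea can be sketched as a function that turns a mental command into a camera movement. The mapping below is an assumption made for illustration rather than the prototype's code; it relies on the Three.js convention that a default camera looks down the negative z axis, so "push" moves forward. The Three.js and web socket parts are left out to keep the snippet self-contained.

```javascript
// Unit movement per command along x (left/right), y (up/down), z (forward/back).
const MOVES = new Map([
  ['push', { x: 0, y: 0, z: -1 }],
  ['pull', { x: 0, y: 0, z: 1 }],
  ['left', { x: -1, y: 0, z: 0 }],
  ['right', { x: 1, y: 0, z: 0 }],
  ['lift', { x: 0, y: 1, z: 0 }],
  ['drop', { x: 0, y: -1, z: 0 }],
]);

// Returns the new camera position after applying one mental command.
function moveCamera(position, command, step = 1) {
  const move = MOVES.get(command);
  if (!move) return position; // ignore commands we have no mapping for
  return {
    x: position.x + move.x * step,
    y: position.y + move.y * step,
    z: position.z + move.z * step,
  };
}

// Simulated stream of commands, as they might arrive over a web socket.
let position = { x: 0, y: 0, z: 0 };
for (const command of ['push', 'push', 'left', 'lift']) {
  position = moveCamera(position, command);
}
console.log(position); // { x: -1, y: 1, z: -2 }
```

In a real scene, the resulting values would be copied onto the camera's position on each message.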

IoT

My 3rd prototype is about controlling hardware in JavaScript. This is something I’ve been tinkering with for a few years so I was excited to build a quick project to control a Parrot mini drone using thoughts!

All these prototypes are pretty small. The main goal was to validate some ideas and learn about the possibilities and limits of such technology, so let’s start by talking about the limits.

Limits

---

As exciting as this technology seems, there are still quite a few important limits.

Training

The fact that each user has to go through training sessions to record brain waves and match them to particular commands is expected but a barrier to adoption for most people. Unless an application solves a real need and the accuracy of the device is really good, I cannot imagine people spending time training a brain sensor.

Latency

When building my prototype using mental commands, I realised that there was a bit of a delay between the moment I started thinking about a particular thought and the moment I could see the feedback in my program.

I assume this is because the machine learning algorithm used in the background receives data from the device in real time and needs samples for a certain amount of time before being able to classify the current thought based on the thoughts previously trained.
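I don't know how Emotiv's classifier actually works, so the following is only a sketch of that assumption: a classifier that buffers samples and cannot answer until a full window has arrived. The window length, the toy `classify` function and all names are hypothetical.

```javascript
// Buffers incoming samples and only classifies once a full window is available.
function createWindowedClassifier({ sampleRate, windowSeconds, classify }) {
  const windowSize = Math.round(sampleRate * windowSeconds);
  const buffer = [];
  return {
    // Minimum delay before a result can be produced, in milliseconds.
    minLatencyMs: (windowSize / sampleRate) * 1000,
    push(sample) {
      buffer.push(sample);
      if (buffer.length < windowSize) return null; // not enough data yet
      const result = classify(buffer.slice());
      buffer.length = 0; // start collecting the next window
      return result;
    },
  };
}

// Toy classifier: "push" if the window's mean is positive, else "neutral".
const classifier = createWindowedClassifier({
  sampleRate: 128,
  windowSeconds: 0.25,
  classify: (samples) => {
    const mean = samples.reduce((sum, s) => sum + s, 0) / samples.length;
    return mean > 0 ? 'push' : 'neutral';
  },
});

console.log(classifier.minLatencyMs); // 250
```

With these made-up numbers, no result can arrive sooner than 250 ms after the thought starts, before even counting the time spent on the classification itself.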

This does impact the type of application you can build with the sensor. For example, building a meditation app would be ok as the latency would not have a major impact on the user experience. However, if you want to build a thought-controlled wheelchair, you can imagine how latency could have a very important impact.

Invasive vs non-invasive

EEG devices are great because you don’t need any surgery; you just put the headset on, add some gel on the sensors and you’re ready to go! However, the fact that it is non-invasive means that sensors have to track electrical signals through the skull, which makes this method less efficient.

The temporal resolution is really good as the sampling rate is pretty fast but the spatial resolution is not great. EEG devices can only track brain activity around the surface of the scalp, so activity happening a bit deeper in the brain is not tracked.

Social acceptance

Wearing a brain sensor is not the most glamorous thing. As long as the devices look the way they do, I don’t think they will be adopted by consumers. As the technology improves, we might be able to build devices that can be hidden in accessories like hats, but there is still another issue: brain sensors can get uncomfortable after a few minutes.

As an EEG device is non-invasive, the sensors have to apply a bit of pressure on the scalp to track electrical signals better. As you can imagine, this slight pressure is ok at first, but slowly becomes uncomfortable over time. Moreover, if a device needs some gel applied on all sensors, this is an additional barrier for people to use it.

Even if the current state of EEG sensors does not make them available or appealing to most people, there are still some interesting possibilities for the future.

Possibilities

---

If we think about the technology in its current state and how it could be with future advancements, we can think of a few different applications.

Accessibility

I would love for brain sensors to help people with some kind of disability live a better life and be more independent.

This is what I had in mind when I built my first prototype of brain keyboard. I know the prototype is not finished at all but I was really interested to see if a general consumer device could help people. Not everybody has access to complex medical systems and I was really excited to see that a more accessible device you can buy online can actually help!

Mindfulness

An application that is already currently the focus of some brain sensors (for example, the Muse) is mindfulness.

Meditation can be difficult. It is hard to know if you’re doing it right. Brain sensors could help people have a direct feedback on how they are performing, or even a guidance on how to improve over time.

Prevention

If brain sensors were used as much as we use our phones, we would probably be able to build applications that could track when certain physiological functions are not working as they should. For example, it would be great if we could build detection algorithms to prevent strokes, anxiety attacks or epilepsy attacks based on brain activity.

Productivity

The same way brain sensors can help with meditation, they could also track the times of day when you are the most focused. If we were wearing a sensor regularly, it would eventually be able to tell us when we should do certain tasks. You could even imagine that your schedule would be organised accordingly to make sure your days are more productive.

Art

I love the intersection of technology and art as a way to explore things I don’t get to do at work. I really think that building creative things with brain sensors should not be underrated as it allows us to explore the different possibilities and limits of the technology before moving on to a more “useful” application.

Combination with other sensors

I recently started thinking about the fact that brain sensors shouldn’t be treated independently. The brain only perceives the world through other parts of the body: it does not see without the eyes, does not hear without the ears, etc. So if we want to make sense of brain waves, we should probably track other biological functions as well.

The main issue with this is that we would end up with setups that would look like this:

And we can be sure nobody would wear that on a daily basis…

Next

---

A few weeks ago, I bought a new brain sensor, the OpenBCI. My next step is to tinker with raw data and machine learning, so I thought this device was perfect for that as it is entirely open-source!

I’ve only had time to set it up, so I haven’t built anything with it yet, but here’s a little preview of what the device and interface look like.

That’s all for now!

I realise it is a long post so if you did read everything, thank you so much!

I am learning as I go so if you have any comment, feedback or if you wanna share resources, feel free!

Resources

---

Here’s a few links if you wanna try some tools, or learn more!

Frameworks

Epoc.js — JavaScript framework to interact with the Emotiv Epoc.

Brain bits — A P300 online spelling mechanism for Emotiv headsets.

Wits — A Node.js library that reads your mind with Emotiv EPOC EEG headset.

Brain monitor — A terminal app written in Node.js to monitor brain signals in real-time.

Ganglion BLE — Web Bluetooth client for the Ganglion brain-computer interface by OpenBCI.

BCI.js — EEG signal processing and machine learning in JavaScript.

Useful links

Brain-Computer interfacing (book)

Principles of Neural Science (book)

A Techy’s introduction to Neuroscience — Uri Shaked

Detecting brain activity state using Brain Computer Interface — Viacheslav Nesterov

People

---