Competing for prime placements in SERPs has a reputation for being overly complicated. However, making it into the top three results on search engines depends heavily on mastering three essentials:

- Page structure and ease-of-crawlability

- Website performance

- Strong foundations for content creators

Living in the JAMStack world and using a headless CMS does not change the key SEO priorities.

There are well-known SEO benefits of migrating from a Legacy CMS to a Headless CMS in regard to page performance, security, user experience, and delivering content to multiple platforms. Since a headless CMS will not necessarily give you the plug-and-play simplicity of installing a plugin to manage SEO factors, you need to follow a few best practices to kick-start stellar SEO results.

Simply put, the biggest visible difference between a traditional CMS and a Headless one is the ability to edit metadata on the fly. Since a traditional CMS is closely integrated with your domain and has control over how content is rendered, using a platform like WordPress or Drupal allows you to easily add page titles, descriptions, and other meta tags out of the box.

A Headless CMS, like GraphCMS, does not control the way your content is rendered due to its cross-platform flexibility, which is why this functionality has to be handled differently.

The commonly accepted best practices for on-page and off-page optimization do not change. High-quality original content, proper keyword choice and placement, interlinked content pieces, domain authority, social sharing, and backlinks from reputable sources are guidelines your content team should keep following.

However, good SEO begins long before the editorial team writes and shapes their content. The build and technical implementations of a project are the benchmarks that the content team can expand upon. So, what can your tech team do to get top SEO results with a modern stack when making the move to a Headless CMS?

Ease-of-crawlability and Page Structure

Use the Schema.org structured data markup schema.

A joint effort by most of the major search engines, Schema.org provides web developers with a set of pre-defined properties to enrich their HTML tags. The on-page markup adds structure to content and makes it more understandable to search engines, which can then deliver richer search results.

Search engine bots work hard to understand the content of a page. Helping them by providing explicit clues about the meaning of a page with structured data allows for better indexing and understanding. For example, here is a JSON-LD structured data snippet provided by Google that shows how contact information might be structured:

<script type="application/ld+json">
{
  "@context": "https://schema.org",
  "@type": "Organization",
  "url": "http://www.example.com",
  "name": "Unlimited Ball Bearings Corp.",
  "contactPoint": {
    "@type": "ContactPoint",
    "telephone": "+1-401-555-1212",
    "contactType": "Customer service"
  }
}
</script>
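
With a headless CMS, a snippet like the one above is usually generated from content rather than written by hand. Here is a minimal TypeScript sketch of that idea. The `OrganizationEntry` shape and both function names are made up for illustration and are not part of any CMS API; only the Schema.org property names come from the snippet above.

```typescript
// Hypothetical shape of an "organization" entry as it might come back from the CMS.
interface OrganizationEntry {
  name: string;
  url: string;
  telephone: string;
  contactType: string;
}

// Build the same Schema.org Organization object as in the snippet above.
function toOrganizationJsonLd(entry: OrganizationEntry): object {
  return {
    "@context": "https://schema.org",
    "@type": "Organization",
    url: entry.url,
    name: entry.name,
    contactPoint: {
      "@type": "ContactPoint",
      telephone: entry.telephone,
      contactType: entry.contactType,
    },
  };
}

// Serialize to a <script> tag. "<" is written as a JSON unicode escape so that
// a value containing "</script>" cannot close the tag early.
function toJsonLdScriptTag(data: object): string {
  const json = JSON.stringify(data).replace(/</g, "\\u003c");
  return '<script type="application/ld+json">' + json + "</script>";
}

const organizationTag = toJsonLdScriptTag(
  toOrganizationJsonLd({
    name: "Unlimited Ball Bearings Corp.",
    url: "http://www.example.com",
    telephone: "+1-401-555-1212",
    contactType: "Customer service",
  })
);
```

The resulting string can be placed in the page head by whatever rendering layer you use.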

Similarly, if your content is more instructional or enhanced, such as when creating recipes or tutorials, structured data also helps your content show up as featured snippets within a graphical search result. An example would be googling “Apple Pie Recipe,” and instead of seeing a list of results leading you to recipes, you would see a “featured” result on the top, which would either be the recipe itself or a link to a chosen destination that is popular for the same query.

Know your meta tags.

Meta tags are the most essential descriptors of a page’s content. Not visible to the user, they help search engines determine what your content is about. The four key meta tags with their respective recommended maximum text lengths are:

- Title tag (70 characters)

- Meta Description - short description of the content (160 characters)

- Meta Keywords - what keywords are relevant for a page (5-10 keywords); note that Google has stated it does not use this tag for ranking, so treat it as optional

- Meta Robots - what should search engines do with a page

Since a developer freely designs the content architecture of a website with an un-opinionated headless CMS like GraphCMS, the meta tags should be added as String fields to all relevant content models. Content authors can then comfortably add the relevant metadata.

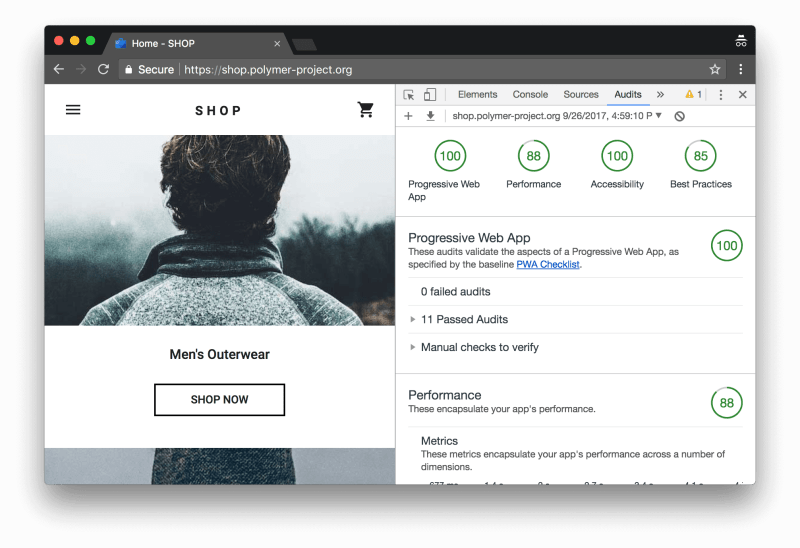
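
Once the meta fields live on the content model, the front end has to turn them into tags. The sketch below is one way that could look, assuming a hypothetical `SeoFields` object with `title`, `description`, and `robots` String fields (the names are illustrative, not a GraphCMS convention). It also checks the recommended maximum lengths from the list above, so overly long values can be flagged before publishing.

```typescript
// Hypothetical SEO fields stored as String fields on a content model.
interface SeoFields {
  title: string;
  description: string;
  robots: string; // for example "index, follow"
}

// Recommended maximum lengths taken from the list above.
const MAX_TITLE_LENGTH = 70;
const MAX_DESCRIPTION_LENGTH = 160;

// Escape characters that are unsafe inside HTML text and attribute values.
function escapeHtml(value: string): string {
  return value
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Return human-readable warnings for fields that are empty or too long.
function validateSeoFields(seo: SeoFields): string[] {
  const warnings: string[] = [];
  if (seo.title.trim().length === 0) warnings.push("title is empty");
  if (seo.title.length > MAX_TITLE_LENGTH) warnings.push("title exceeds " + MAX_TITLE_LENGTH + " characters");
  if (seo.description.trim().length === 0) warnings.push("description is empty");
  if (seo.description.length > MAX_DESCRIPTION_LENGTH) {
    warnings.push("description exceeds " + MAX_DESCRIPTION_LENGTH + " characters");
  }
  return warnings;
}

// Render the fields as tags for the document head, one tag per line.
function renderSeoHead(seo: SeoFields): string {
  return [
    "<title>" + escapeHtml(seo.title) + "</title>",
    '<meta name="description" content="' + escapeHtml(seo.description) + '">',
    '<meta name="robots" content="' + escapeHtml(seo.robots) + '">',
  ].join("\n");
}
```

Running `validateSeoFields` in a build step or preview screen is one possible design; it gives authors feedback without blocking them from saving.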

Regularly perform Lighthouse Audits.

Lighthouse is an open-source tool for auditing webpages. It runs a series of tests on your webpage and generates a report with a Lighthouse score per category and a series of recommendations. The audit categories include performance, accessibility, SEO, and progressive web apps, among others.
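
To make audits a regular habit, the JSON report can be checked automatically, for example in CI. The sketch below assumes you already have a Lighthouse JSON report loaded as an object; it reads only the `categories` section, where each category carries a `score` between 0 and 1 (or `null` if it could not be computed). The threshold values and the function name are illustrative assumptions.

```typescript
// Minimal slice of a Lighthouse JSON report: category id -> score in the
// range 0..1, or null when the score could not be computed.
interface LighthouseReportSlice {
  categories: { [id: string]: { score: number | null } };
}

interface ScoreThreshold {
  category: string;
  minimum: number; // on the 0-100 scale shown in the Lighthouse UI
}

// Hypothetical minimum scores, chosen for illustration only.
const defaultThresholds: ScoreThreshold[] = [
  { category: "performance", minimum: 90 },
  { category: "accessibility", minimum: 90 },
  { category: "seo", minimum: 90 },
];

// Return the ids of all categories that are missing, unscored, or below their minimum.
function findFailingCategories(report: LighthouseReportSlice, thresholds: ScoreThreshold[]): string[] {
  return thresholds
    .filter((threshold) => {
      const category = report.categories[threshold.category];
      const score =
        category !== undefined && category.score !== null ? Math.round(category.score * 100) : 0;
      return score < threshold.minimum;
    })
    .map((threshold) => threshold.category);
}
```

A build script could fail the build whenever `findFailingCategories` returns a non-empty list.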

More recently, a surge in Static Site Generators, like Gatsby and Hugo, has also made a compelling argument for going Headless when starting new projects. When testing Gatsby’s starter out of the box, performance scores are very high compared to starting with basic themes from most legacy CMSs, giving new projects a head start on page performance and optimization.

Website Performance

Using React or Vue – SEO is in your hands.

Although loading speed definitely favors single page applications built with React and Vue, there are a few SEO challenges that can be avoided if you take the necessary precautions.

Adding a component for your metadata is essential. However, a JavaScript-powered web application that renders dynamically on the client-side may not be crawled and indexed at all. Components that are not properly read will be assumed to be empty. Here are some measures to properly manage your metadata:

- To manage the metadata of a React web app, take a look at React Helmet. A component like React Router gives each view its own URL path, which makes the URL structure of your website more search engine friendly.

- If JavaScript is disabled on the client side, you can look into Isomorphic JavaScript technology, which runs the same JavaScript on the server to render the page before sending it to the client. Alternatively, a tool like Prerender will pre-render the website and return the content in full HTML.
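
The pre-rendering approach in the last bullet boils down to one decision per request: serve the pre-rendered HTML to crawlers and the normal JavaScript app to everyone else. The sketch below shows that decision in isolation. The crawler list is a deliberately short, hypothetical sample, and the function names are not taken from Prerender or any other tool.

```typescript
// Hypothetical, deliberately short list of crawler user-agent substrings.
const CRAWLER_TOKENS: string[] = [
  "googlebot",
  "bingbot",
  "duckduckbot",
  "twitterbot",
  "facebookexternalhit",
  "linkedinbot",
];

// True when the user-agent string contains one of the known crawler tokens.
function isCrawler(userAgent: string): boolean {
  const normalized = userAgent.toLowerCase();
  return CRAWLER_TOKENS.some((token) => normalized.indexOf(token) !== -1);
}

// Pick the HTML to send: the pre-rendered snapshot for crawlers when one
// exists, otherwise the regular client-side app shell.
function chooseHtml(userAgent: string, prerenderedHtml: string | null, appShellHtml: string): string {
  if (isCrawler(userAgent) && prerenderedHtml !== null) {
    return prerenderedHtml;
  }
  return appShellHtml;
}
```

Falling back to the app shell when no snapshot exists keeps the site working even if pre-rendering fails for a page.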

Use a static site generator

SSGs like Hugo, Jekyll, and Gatsby often take care of the major technical SEO challenges for you. Not only do they deliver fast loading times because the site is pre-built before it is served, but they also do the heavy lifting in regard to metadata management and mapping your content. Check out Gatsby’s React Helmet and Sitemap plugins.
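
If your generator has no sitemap plugin, the output is simple enough to produce yourself at build time. The following sketch builds a sitemap in the sitemaps.org XML format from a list of pages. The `SitemapEntry` shape and the function names are assumptions for illustration; in practice the entries would come from your CMS query.

```typescript
// Hypothetical page record, for example mapped from a CMS query result.
interface SitemapEntry {
  path: string; // starts with "/", for example "/new-york-pizza"
  lastModified: string; // date in YYYY-MM-DD format
}

// Escape the characters that are reserved in XML text content.
function escapeXml(value: string): string {
  return value
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;");
}

// Build a sitemap document following the sitemaps.org protocol.
function buildSitemap(baseUrl: string, entries: SitemapEntry[]): string {
  const urls = entries.map(
    (entry) =>
      "  <url>\n" +
      "    <loc>" + escapeXml(baseUrl + entry.path) + "</loc>\n" +
      "    <lastmod>" + escapeXml(entry.lastModified) + "</lastmod>\n" +
      "  </url>"
  );
  return ['<?xml version="1.0" encoding="UTF-8"?>', '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">']
    .concat(urls, ["</urlset>"])
    .join("\n");
}
```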

Use a CDN

A Content Delivery Network, like Cloudflare, allows you to distribute your content across a wide network of servers, usually distributed globally. When a user makes a call to your website, your website responds with two things: the basic text and data, and instructions to pull the media and/or scripts from the CDN. The user’s browser obeys those instructions and asks the CDN for the media.

Optimize images

Optimize image size in your headless CMS. In the case of GraphCMS, you can pre-define the desired maximum size of your image assets in your GraphQL query. Even if an inexperienced content creator uploads a large image to the CMS, your precautions will take care of fast loading times.
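
As a rough sketch of what this can look like, the function below builds a GraphQL query string that asks for a resized image URL. The `posts` model and `coverImage` field are hypothetical, and the `transformation` argument reflects my understanding of how GraphCMS exposes image resizing on asset URLs, so verify the exact argument names against the current API documentation before relying on it.

```typescript
// Build a query that requests cover images no larger than the given size.
// "posts" and "coverImage" are hypothetical names for a model and an asset
// field; the "transformation" argument is assumed from the GraphCMS asset API.
function buildCoverImageQuery(maxWidth: number, maxHeight: number): string {
  const isPositiveInteger = (n: number): boolean => isFinite(n) && n > 0 && Math.floor(n) === n;
  if (!isPositiveInteger(maxWidth) || !isPositiveInteger(maxHeight)) {
    throw new Error("maxWidth and maxHeight must be positive integers");
  }
  return [
    "query PostsWithCoverImage {",
    "  posts {",
    "    title",
    "    coverImage {",
    "      url(transformation: { image: { resize: { width: " + maxWidth + ", height: " + maxHeight + ", fit: clip } } })",
    "    }",
    "  }",
    "}",
  ].join("\n");
}

const coverImageQuery = buildCoverImageQuery(800, 600);
```

Because the size limit lives in the query, it applies to every image regardless of what was uploaded.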

Make use of Lazy Loading of images and video. The most essential content is downloaded first and only when required do media resources get fetched. Initial page size goes down, and so does page load time.
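
Modern browsers support lazy loading natively through the `loading` attribute on `img` elements, so the simplest version needs no library. The helper below is a small sketch that renders such a tag; the `ImageAttributes` shape and the `aboveTheFold` flag are illustrative assumptions. Setting explicit `width` and `height` lets the browser reserve space before the image arrives.

```typescript
interface ImageAttributes {
  src: string;
  alt: string;
  width: number; // intrinsic width in pixels
  height: number; // intrinsic height in pixels
}

// Escape characters that are unsafe inside a double-quoted HTML attribute.
function escapeAttribute(value: string): string {
  return value
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Render an <img> tag. Images visible on first paint are loaded eagerly;
// everything else is deferred until the user scrolls near it.
function imageTag(image: ImageAttributes, aboveTheFold: boolean): string {
  const loading = aboveTheFold ? "eager" : "lazy";
  return (
    '<img src="' + escapeAttribute(image.src) + '"' +
    ' alt="' + escapeAttribute(image.alt) + '"' +
    ' width="' + image.width + '"' +
    ' height="' + image.height + '"' +
    ' loading="' + loading + '">'
  );
}
```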

Use images in the SVG or WebP format. SVG is a vector format that stays sharp at any size, which suits logos and icons, while WebP is a compressed raster format that typically produces smaller files than JPEG or PNG for photos.

Add Open Graph meta tags for your images. When a page is shared, social platforms use them to build a link preview, so users get a quick visual summary of your content.
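
Here is a minimal sketch of rendering Open Graph tags, plus a Twitter Card type, from page data. The `SharePreview` shape and the function names are assumptions for illustration; the `og:*` property names and the `twitter:card` value are the standard ones.

```typescript
// Hypothetical data needed for a link preview, usually taken from CMS fields.
interface SharePreview {
  title: string;
  description: string;
  url: string;
  imageUrl: string;
}

// Escape characters that are unsafe inside a double-quoted HTML attribute.
function escapeContent(value: string): string {
  return value
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Render Open Graph tags and a Twitter Card type, one tag per line.
function renderShareTags(preview: SharePreview): string {
  const openGraph: Array<[string, string]> = [
    ["og:type", "website"],
    ["og:title", preview.title],
    ["og:description", preview.description],
    ["og:url", preview.url],
    ["og:image", preview.imageUrl],
  ];
  const tags = openGraph.map(
    (pair) => '<meta property="' + pair[0] + '" content="' + escapeContent(pair[1]) + '">'
  );
  // Twitter reads the og:* tags for title, description, and image when no
  // twitter:* equivalents are present, so only the card type is added here.
  tags.push('<meta name="twitter:card" content="summary_large_image">');
  return tags.join("\n");
}
```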

HTTPS Everywhere

Secure your communication with users by upgrading to HTTPS. Users are increasingly wary of websites that aren’t secure by default, and with Google Chrome marking HTTP websites as not secure, there’s no excuse to avoid adding that layer of encryption and security.

HTTPS can also help pages load faster, because browsers only support HTTP/2 over secure connections, and as we know, page speed plays an important role in ranking. More specifically, HTTPS itself has been a factor in Google’s ranking algorithms since 2014.

Setting Foundations for Content Creators

Long before a content creator has to publish a piece of content, there are optimizations that the technical team can implement when creating websites. A CMS that encourages you to enrich all your content with the right attributes can be customised to your particular use case, and make it easier for you to implement everything correctly.

Firstly - ensure that all pages follow a friendly URL structure, similar to example.com/page-title-with-keywords rather than something generic along the lines of example.com/pageid1234. SEO friendly URLs are designed to match what people are searching for with better relevance and transparency of where their click leads to. URLs that are keyword-rich and short tend to perform better than longer ones.

Your URL should include the target keyword for your page, with the content itself having clearly defined tags like H1, H2, etc. When you include a keyword in your URL, the keyword tells search engines that “This page is about this keyword,” and having meta tags that reinforce that correlation strengthens your page’s relevance to the search.

This lets Search Engines know exactly what your content is about, and how relevant it would be for a user searching for those queries.
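
Friendly URLs are usually derived from the page title when an entry is created. The sketch below shows one simple way to do that. The function names are illustrative, and the approach is intentionally basic: it keeps only ASCII letters and digits, so accented or non-Latin characters are dropped rather than transliterated.

```typescript
// Turn a page title into a lowercase, hyphen-separated slug.
function slugify(title: string): string {
  return title
    .toLowerCase()
    .replace(/[^a-z0-9]+/g, "-") // replace each run of other characters with one hyphen
    .replace(/^-+|-+$/g, ""); // trim hyphens from both ends
}

// Combine a base URL (without trailing slash) and a title into a page URL.
function pageUrl(baseUrl: string, title: string): string {
  return baseUrl + "/" + slugify(title);
}

const examplePageUrl = pageUrl("https://example.com", "New York Pizza: The 10 Best Slices!");
```

Storing the slug as its own field in the CMS, rather than recomputing it on every build, keeps URLs stable when a title is later edited.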

When someone searches for “New York Pizza,” Google knows to first serve pages that almost identically match the query.

Secondly, empower your content team to enrich pages as much as possible while keeping page weight in check. The fewer objects a page has, the faster it loads and the better its chances of ranking well on SERPs. On the other hand, more images and media tend to improve the reader experience and lower bounce rates, at the cost of slower page loads. Fine-tuning how a website treats assets and optimizing their usage can contribute meaningfully to SEO when done right.

Ensure that assets uploaded by the content team get properly resized and compressed to avoid adverse impacts on page performance. When new posts are created, ensure that the content team is able to provide relevant file names and alt-attributes for the images to follow best practices when being indexed.
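
One way to support the content team here is a small check that runs when an asset is saved or during the build. The sketch below flags missing alt text and file names that look auto-generated. The `ImageAsset` shape and both naming rules are hypothetical heuristics, not an established standard, so adjust them to your own conventions.

```typescript
// Hypothetical asset record as stored in the CMS.
interface ImageAsset {
  fileName: string;
  alt: string;
}

// Return a list of issues for one asset; an empty list means it passed.
function auditImageAsset(asset: ImageAsset): string[] {
  const issues: string[] = [];
  if (asset.alt.trim().length === 0) {
    issues.push("missing alt text");
  }
  // Expect lowercase words separated by hyphens, then an extension.
  if (!/^[a-z0-9]+(-[a-z0-9]+)*\.[a-z0-9]+$/.test(asset.fileName)) {
    issues.push("file name should be lowercase words separated by hyphens");
  }
  // Names such as IMG_1234.JPG say nothing about the image content.
  if (/^(img|dsc|image|screenshot)[-_ ]?\d+/i.test(asset.fileName)) {
    issues.push("file name looks auto-generated");
  }
  return issues;
}
```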

Lastly, ensure that pages are easily shareable. We’ve covered the importance of having OpenGraph and Twitter Card enabled meta information, making it easier for links shared to carry forward the correct images and page information. Having pages that can easily be shared via Social Media and Bookmarking sites with images, headers, and titles makes it easier for readers to amplify the reach of a page, allowing for overall improvements in backlinks, referral traffic, and brand awareness - giving extra strength to your pages and increasing their trustworthiness when being ranked.

Final Takeaway

SEO isn’t hard when done right. Laying the technical foundation before the marketing or content team starts to create content gives you a head start in ranking on SERPs, rather than having to allocate time for technical additions and fixes on the go. Investing time to put technical parameters in place for the content team empowers them to focus on what they do best, and gives you the peace of mind of having websites that perform optimally for search engines. Engage regularly with the content team to understand any changes in SEO factors, and make sure that your website is continually audited and checked for any changes that might impact SEO.

Full disclosure: I work for GraphCMS, and this post originally appeared on the GraphCMS blog as “Headless CMS and technical SEO best practices.”