Software testing consumes considerable time and resources. It can be viewed from several perspectives. One is what we are testing: each deliverable in the project, such as the requirements, design, code, documents, and user interface, should be tested. Another is how we test the code. We may test it against the user and functional requirements or specifications, i.e., black-box testing. At this level, we treat the code as a black box and check that every service expected from the program exists and works as expected. We may also need to test the structure of the code, i.e., white-box testing. Testing can also be divided by its sub-stages or activities, for instance, test case generation and design, test case execution and verification, and building the testing database. Testing aims to make the developed software as close to error-free as possible. However, no process can guarantee that the developed software is 100% error-free.

Though manual testing is often responsible for missed bugs, sub-optimal test coverage, and human errors, it is impossible to completely replace it with automation testing, even in large projects. UX, usability, and exploratory testing require human judgment because an automated tool can’t mimic user behavior. Automated testing is not sufficient for security testing either: automated vulnerability scanning produces many false positives, so its results require a subsequent manual check.

In today’s real-world scenarios, applications undergo frequent changes based on user feedback, web traffic (performance/load), competition, compliance laws, etc. So to stay in the race, there is a need to innovate, upgrade, and enhance your product offerings, making it challenging to automate everything. So what does it mean to implement a complete automated testing strategy? Is it even feasible? And does fully automated QA guarantee quality?

Do we need complete automation testing?

Automated testing is not precisely testing; it is checking facts. Checking is machine-decidable; testing requires perception. Testing is an investigative exercise in which we aim to obtain new information about the system under test through exploration. Manual testing requires a human to make sound judgments on usability. As a result, we can notice irregularities we were not expecting. In addition, implementing a test automation solution involves other interrelated development activities beyond scripting test cases.

Usually, businesses develop a framework to handle operations like test case selection and reporting. Developing the framework is a huge task that requires skilled developers and takes time. Furthermore, even when a fully functional framework is in place, scripting an automated check takes longer than manually executing the same test once.

However, businesses should not lean exclusively toward one or the other, as both methods are required to get insight into the quality of the application. Businesses spend many hours developing a perfect test automation solution using the best tools and practices, but it isn’t beneficial if the automated checks do not help the team. Instead of aiming to replace manual QA, we should embrace what automation offers the team. For example, automation testing frees up QA’s time for more exploratory testing, which in turn reveals more bugs.

Which tests should be automated?

Teams often want to automate everything they can. But that comes back to haunt them as they run into various problems. Businesses should analyze which types of test cases they wish to automate and which cannot or should not be automated. Teams should not automate tests just for the sake of it. For example, if you automate a whole set of tests that will require a great deal of upkeep, you are investing additional time and money that you may not have. Instead, adopt a risk-based approach, so that you automate only the most valuable tests.
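One way to make a risk-based approach concrete is to score each candidate test by how likely the feature is to break and how much a failure would cost, and to weigh that against the upkeep of the script. The sketch below is only an illustration under assumed conventions: the `Candidate` fields, the 1 to 5 scales, and the `min_risk` and `max_upkeep` thresholds are hypothetical and would need tuning for a real team.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    """A test case being considered for automation (hypothetical fields)."""
    name: str
    likelihood: int  # chance of the feature breaking, 1 (low) to 5 (high)
    impact: int      # cost to the business if it breaks, 1 (low) to 5 (high)
    upkeep: int      # expected maintenance effort of the script, 1 to 5

    @property
    def risk(self) -> int:
        # Simple risk score: likelihood multiplied by impact.
        return self.likelihood * self.impact


def select_for_automation(candidates, min_risk=12, max_upkeep=3):
    """Return high-risk, low-upkeep candidates, highest risk first."""
    chosen = [
        c for c in candidates
        if c.risk >= min_risk and c.upkeep <= max_upkeep
    ]
    return sorted(chosen, key=lambda c: c.risk, reverse=True)


if __name__ == "__main__":
    backlog = [
        Candidate("checkout payment", likelihood=4, impact=5, upkeep=2),
        Candidate("login", likelihood=3, impact=5, upkeep=1),
        Candidate("profile avatar upload", likelihood=2, impact=2, upkeep=2),
        Candidate("animated landing page", likelihood=4, impact=4, upkeep=5),
    ]
    for c in select_for_automation(backlog):
        print(f"{c.name}: risk={c.risk}")
```

With these made-up numbers, only the checkout and login tests are selected. The landing page scores high on risk but is filtered out because its script would be expensive to maintain, which makes it a better fit for manual or exploratory testing.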

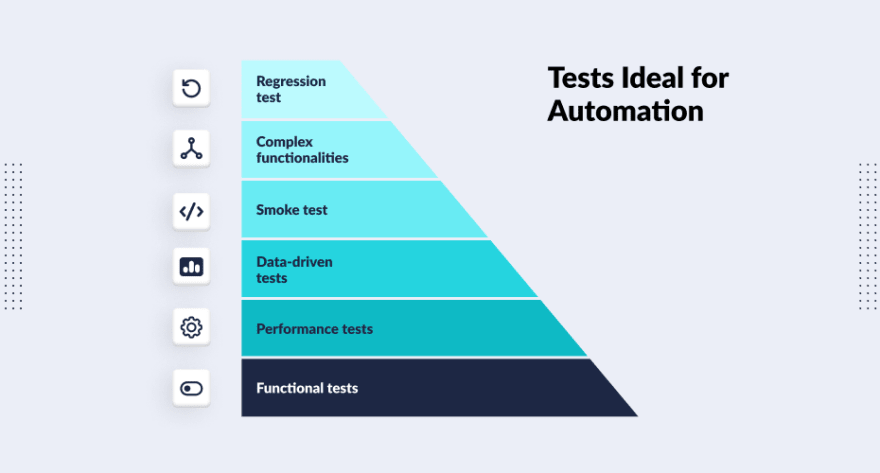

Let’s have a look at the most feasible automation test scenarios:

Regression tests: Regression tests require the same variables to be tested multiple times to ensure the new features do not mess with the older functionality. Regression tests are perfect for automation.

Complex functionalities: Businesses can automate all tests requiring complex calculations.

Smoke tests: Automated smoke tests quickly verify that the most significant functionality works and indicate whether a build is stable enough to warrant further testing.

Data-driven tests: Testing the same functionality repeatedly with numerous data sets is another good candidate for automation testing.

Performance tests: Tests that monitor software performance under different circumstances are perfect for automation testing. Carrying out performance testing manually would be incredibly detailed and time-consuming work for the testing team.

Functional tests: Functional tests that a developer needs to execute quickly for immediate feedback are another case where automation makes sense. It is impossible to achieve this without automation, especially in fast-growing organizations.
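As an illustration of the data-driven scenario in the list above, the following minimal sketch runs one check against a table of data sets using Python's standard `unittest` module. The `shipping_fee` function, its 50.00 threshold, and the values in `CASES` are hypothetical stand-ins for real application logic and test data.

```python
import unittest


def shipping_fee(order_total: float) -> float:
    """Hypothetical function under test: free shipping from 50.00 upward."""
    return 0.0 if order_total >= 50.0 else 4.99


# Each row is one data set: (order total, expected fee).
CASES = [
    (0.00, 4.99),
    (49.99, 4.99),  # just below the threshold
    (50.00, 0.0),   # boundary value
    (120.00, 0.0),
]


class ShippingFeeTest(unittest.TestCase):
    def test_fee_for_each_data_set(self):
        for order_total, expected in CASES:
            # subTest reports every failing row instead of stopping at the first.
            with self.subTest(order_total=order_total):
                self.assertEqual(shipping_fee(order_total), expected)


if __name__ == "__main__":
    unittest.main()
```

Adding a new data set means adding one row to `CASES` rather than writing a new test. Because the same rows are rerun unchanged on every build, a suite like this can also serve as a regression check.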

Problems with automated testing

The crux of the problem is that agile teams are not testing anymore. Manual testing has lost its advantage due to development practices and cultures like DevOps. There is a divide in the QA space — those who can code and those who can’t. Most testers now struggle to keep up with the automation demand. There is pressure to automate tests in every sprint, and there is not enough time for thorough exploratory testing.

The problem in agile development is that testers take a user flow and automate its acceptance criteria. But, while doing so, their primary and only focus is wrestling with their limited coding skills to get the test to pass. Naturally, this creates a narrow focus: the only goal becomes automating the test and seeing it pass in the build pipeline.

High expectations

Most people in business believe new technical solutions will save the day. Testing tools are no exception! We cannot deny that tools nowadays can solve almost every problem we currently face in test automation. However, this unrealistic optimism also leads to unrealistic expectations. No matter how competent the tool is, if management expectations are unrealistic, it will not meet expectations.

Automating unnecessary tests

Don’t automate tests just for the sake of it. Instead, put some thought into the process. Study the high-level and low-level architecture of the product. Ask what can go wrong. Analyze the integration points and look for potential breaking points.

Take a risk-based approach to automation as part of your overall testing approach. For each scenario, ask what the likelihood of something breaking is and what the impact of the failure would be. If the answer to both is high, that scenario should be automated and executed on every build.

Flawed security

Just because the test tools do not find any defects doesn’t mean there is no defect in the software. It is crucial to understand that if the tests themselves contain defects, they will produce incorrect outcomes, and the automated test will keep reproducing those incorrect results indefinitely.

Overlooking important scenarios

A severe issue often leaks into production because no one thought about a particular scenario. It doesn’t matter how many automation tests are executed. If a scenario is overlooked, there will be a bug, as per Sod’s law, i.e., if something can go wrong, it will.

Conclusion

In modern software development, most test automation engineers spend their time battling automation code and getting the tests to work. They hardly focus on proper testing and exploring the system.

There is not enough time to write automation code and perform exploratory testing. Teams automate sprint after sprint and forget about the big picture.

Often businesses end up executing tons of automated tests, yet exploratory testing finds the majority of bugs. Instead, businesses should be selective on what to automate based on risk assessment. What might go wrong, and what will impact the business if it does go wrong?

LambdaTest’s test execution platform allows users to run both manual and automated tests of web and mobile apps across 3000+ different browsers, browser versions, and operating system environments. Over 500 enterprises and 600,000+ users across 130+ countries rely on LambdaTest for their test execution needs.