O11yCon 2018: Notes and Observations

Two weeks ago I had the pleasure of attending the first-ever o11ycon, a convention focused on "observability", put on by Honeycomb. Online you'll find a variety of definitions of what observability actually is: some people essentially call it the next step in monitoring, while most speakers at o11ycon settled on the control theory definition (how well a system's internal state can be inferred from its external outputs). Essentially all of these definitions come down to the ability to ask new questions about your system's operational state without deploying more code. It's also important to remember that observability is a relative quality: systems are more or less observable, rather than simply observable or not. Observability is a relatively new concept in the ops space, so when I saw the opportunity to check out o11ycon and learn from some leaders on this nascent subject, I jumped at it.

The following is a (very rough) chronological account of the day's events, along with my observations and musings on the talks and discussions. I did my best to lend some structure to what are essentially my notes from the day. Rather than agonizing over how to fit in a jam-packed day that covered everything from talks on debugging AWS cost spikes live to group discussions on building a culture of trust, I figured I would let this post serve as a survey of the subjects covered, which readers can supplement with the videos once the recordings are posted. While I tried to document what I considered the most insightful parts of the day, a key highlight of o11ycon was the free-form "Open Spaces Style Discussion" (OSSD) breakout groups, which were not recorded and are hard to transcribe. I can't convey the dynamic of these groups well enough in writing, so all I will say is that if you get a chance to go to the next o11ycon, you definitely should, specifically for the OSSD groups.

Charity Majors

We kicked off the day with Honeycomb co-founder and CEO Charity Majors. If you're familiar with Charity from Twitter, you'll know I am not exaggerating when I say that her (maybe) 15 minutes on stage were remarkably dense with wisdom and laughs.

She talked about how, going forward, observability must become a goal for software engineers if they want to keep their sanity. Engineers are continually asked to take on a wide variety of work and wear many hats, be they front-end, back-end, ops, or design, all while being paid the same amount and having the same number of hours in a day. As our systems become more distributed and more complex, the time devs spend debugging one-off production issues could grow to eclipse their actual productive development time, unless we level up our tooling and instrumentation to meet the challenge. Moreover, because systems are becoming more complex, the failures we face will increasingly live in the long, narrow tail of the error distribution, which means leaning on tests and TDD won't save us. The cost of testing for these kinds of bugs already exceeds the cost of any one of those issues individually, and no test suite can be general enough to catch them all efficiently.

Charity also touched on the fact that observability, or even rudimentary visibility into production in some settings, is still an incredibly new concept. Her hope is that this newness will make it a great entry point for fresh ideas in production software operation, free of the orthodoxies that established approaches accumulate over time. The key "stick" from the first presentation was that, in order for dev teams to keep their heads above water as systems become more fragmented and complex, developers must learn to build operable software. The key "carrot", on the other hand, was that observable systems free engineers from the drudgery of blind development, and even enable us to propose business goals based on the deeper insights we can derive. In 2018, pretending to have "vision" when you don't even have visibility into your business is a losing bet.

Favorite Quote:

It's 2018, if you're SSHing into a box, you've already screwed yourself.

Christine Spang, "Why O11y Matters"

Next up was Christine Spang, founder of Nylas and recent dev.to AMA star. Spang reviewed the state of the industry from an architecture perspective, giving the audience a basic rundown of the move from monolithic, LAMP-stack-style applications to today's more decoupled, service-oriented affairs. One of the most interesting points from this historical recap was her emphasis that, even though the term "microservice" has come to dominate the industry, many of us are not running true microservices, and that a distributed system never had to be a microservice-based one. The point of that distinction was that even if your services are a little chunkier than you feel comfortable admitting, even if you're air-quoting "microservices" when you describe your architecture, improved observability can be a game changer in production, because it counters siloing and promotes inclusivity on your developer team. That's because higher levels of observability:

- Enable all developers to gain the same insights as the longest-standing veterans on your team

- Take the burden of memory and documentation off those vets, and

- Open up on-the-ground questioning of user behavior to your greater organization

The next portion of Spang's talk focused on practical steps toward more observable systems. The first step she recommended was moving to structured logging, which was the subject of some debate for the rest of the day, given the challenge of enforcing a logging style across an organization. What I loved about every talk at O11ycon was the emphasis on a reasonable, incremental, and data-driven approach to improving systems: build it the new way, try running it, and measure how much happier you are compared to your older code.

In that vein, Spang's second low-lift recommendation was attaching all events in a given codepath to the current request's context, an approach endorsed by most of the day's speakers. That works great if you're using Flask, like Nylas does, and have access to a global request object. Later that day I mentioned that in my Rails codebase I didn't have access to such a global (to my knowledge), and Honeycomb's Ben Hartshorne had the killer tip to use Ruby's per-thread storage (accessible through Thread.current[:var]) as an event store. Every time a request crosses some self-defined threshold, you can store the entire context of that execution in that store, and then easily dump out and sample those records later.

Moving to this more observable approach allowed Nylas to start investigating failing requests based on which expression was breaking, and to drill all the way down to a problematic DB shard when they noticed inbox syncing acting up. One great takeaway from Nylas' approach is that they are now trying to use observability to drive technical onboarding and architectural knowledge, because new devs can simply ask what the systems are actually doing when reviewing architectural choices.
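Ben Hartshorne's per-thread event store tip translates fairly directly to other languages. Below is a minimal Python sketch of the same idea, assuming a threaded server that handles one request per thread: `threading.local()` stands in for Ruby's `Thread.current[:var]`, each request accumulates context on a single event, and events that cross a latency threshold are copied into a record store that can be sampled later. The names `start_request`, `add_field`, `finish_request`, `SLOW_REQUESTS`, and the 500 ms threshold are hypothetical, not taken from Nylas' or Honeycomb's code.

```python
import random
import threading
import time

# Per-thread storage: a rough Python analogue of Ruby's Thread.current[:var].
# Assumes one request per thread (async servers would want contextvars instead).
_local = threading.local()

# Hypothetical record store and threshold, for illustration only.
SLOW_REQUESTS = []
SLOW_THRESHOLD_MS = 500.0


def start_request(request_id):
    """Begin a fresh event for the request this thread is handling."""
    _local.event = {"request_id": request_id}
    _local.started = time.monotonic()


def add_field(key, value):
    """Attach one piece of context (user, shard, flag state...) to the event."""
    _local.event[key] = value


def finish_request(threshold_ms=SLOW_THRESHOLD_MS):
    """Close the event; keep a copy in the record store if the request was slow."""
    event = _local.event
    event["duration_ms"] = (time.monotonic() - _local.started) * 1000
    if event["duration_ms"] >= threshold_ms:
        SLOW_REQUESTS.append(dict(event))
    _local.event = None
    return event


def sample_slow_requests(k):
    """Return a random sample of at most k stored slow-request events."""
    return random.sample(SLOW_REQUESTS, min(k, len(SLOW_REQUESTS)))
```

In a handler you would call `start_request` on entry, call `add_field("db_shard", shard_id)` wherever useful context becomes known, and call `finish_request` on exit. Because every field lands on one event per request, a slow request carries its whole story with it when you sample it later.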

Favorite Quote:

Software is opaque by default

Breakout Sessions

Probably the coolest part of O11ycon was the "Open-Spaces-Style Discussions" I mentioned before. Essentially, these were breakout groups centered on crowd-sourced topics from the attendees. The key difference from most breakout sessions was that we were encouraged to join and leave groups at will, based on whether we felt we were getting value from, or contributing value to, the conversation. It was essentially 20 minutes of brainstorming questions and grouping them into themes, followed by an hour of discussions where, if you didn't feel engaged by a conversation, you were free to leave and find one you liked more.

Breakout Session #1, Serverless and O11y

I decided to join a discussion group on achieving observability in heavily or solely Serverless systems. Before I broke off to join a group on "Starting with Observability", much of the discussion among the Serverless crowd centered on the inherent opaqueness of Serverless approaches. This opaqueness seemed mostly due to vendor-side decision making, but I also had a feeling that these problems are inherent to a platform where a key goal is knowing as little as possible about the execution environment. To me, visibility into resource utilization and the presence of noisy neighbors seems like it would be a vendor value-add when choosing a serverless platform, but I can see why companies like Amazon and Google might not want to offer it, since it could also lead to stricter SLA enforcement and higher operating costs on their end. Funnily enough, just as I was joining my second discussion group of the first batch, the first thing I heard was "Amazon's SQS [Simple Queue Service] is about as black box as it can get, and they probably won't be opening it up." That left me doubtful that we'll see more instrumentation exposed on Serverless platforms in the near future.

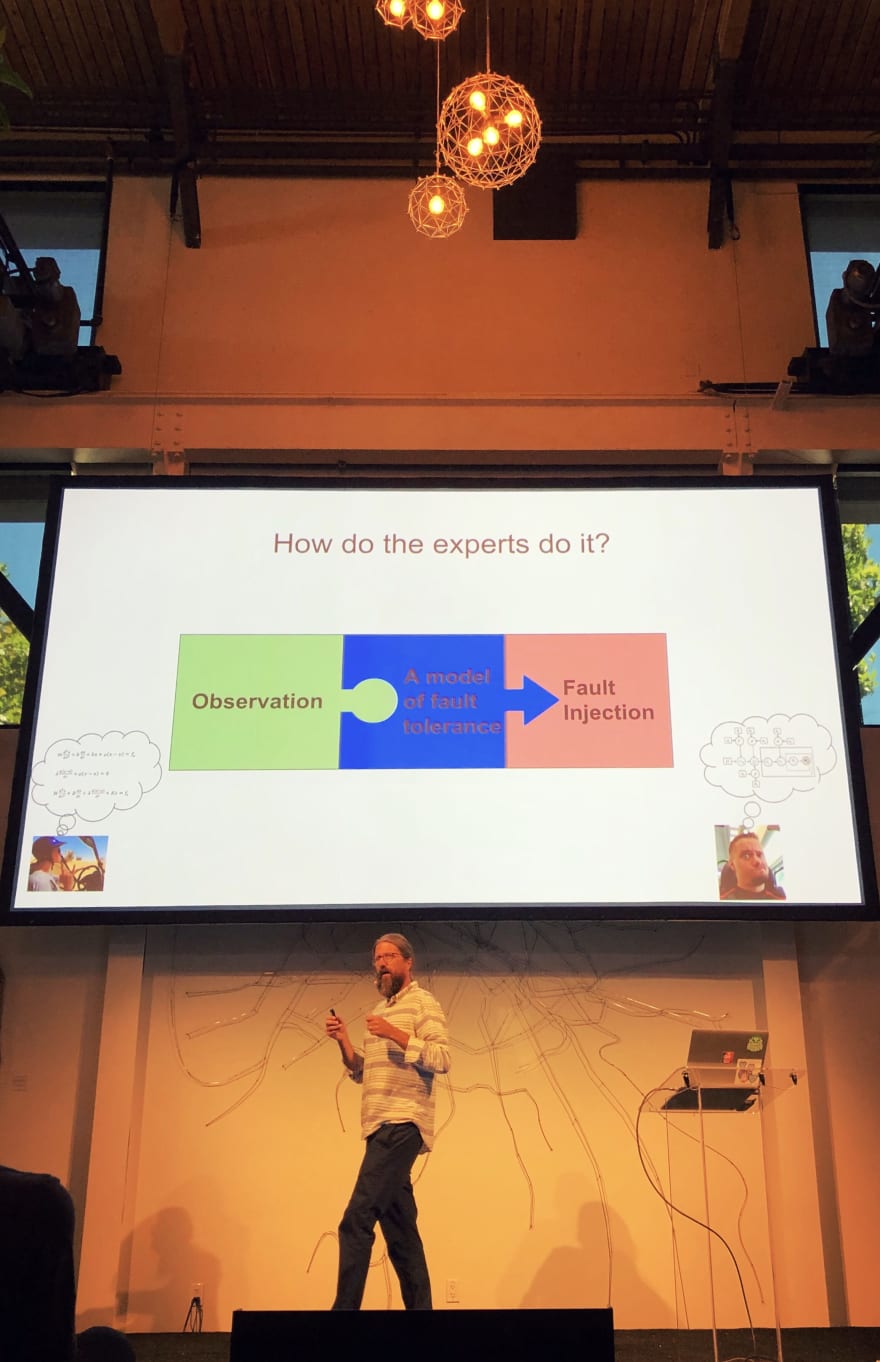

Dr. Peter Alvaro, "Twilight Of The Experts"

I really don't feel I can do this next talk justice here, so I decided to give it a separate post to keep this write-up a little shorter. You can find my write-up here, and I'll update this post with a link to the recording when it's up.

Favorite Quote:

If you have any take-aways from today's talk, the top three should be:

- Composability is the last hard problem

- Priests are human

- We can automate the peculiar genius of experts

Emily Nakashima, "Instrumenting The Browser"

Emily's talk, which I again encourage you to catch at o11ycon.io, is best summed up in my mind as observability finally fulfilling the "promise of analytics" for the frontend. I think when most fresh devs hear about front-end analytics, they assume the data will be actionable at a level of granularity that is rarely achieved, and don't realize that most of the time it's more useful to business stakeholders than to anyone building the product. Emily, with a guest appearance from fellow Honeycomber Rachel Fong, took the audience through the instrumentation of Honeycomb's frontend and described a few problems they solved. Without diving into the details too much, they highlighted how little telemetry traditionally instrumented applications make available. Because this post is essentially a recap of my notes, I'll just list my key takeaways:

- You can't set goals if you don't instrument, because you won't know anything about the current state other than "bad", and will have no context for what "good" looks like

- Infrastructure speed doesn't matter if you don't pass that on to your users

- Even if you don't use Honeycomb, you can get started with event emission by sending events wherever you already send logs (as long as that backend supports at least some multi-dimensional querying), or to your error-tracking or monitoring services.

- Rachel shared a story about a solution she had been pushing for, which she recognized as over-engineered only once she realized she hadn't established a problem and success metrics beforehand
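The advice to start by sending events wherever you already send logs can be sketched in a few lines. This is a minimal Python sketch using the standard `logging` and `json` modules, assuming your log backend can parse one JSON object per line; `emit_event` and the example fields (`page_load`, `route`, `load_ms`, `user_id`) are hypothetical and not Honeycomb's schema.

```python
import json
import logging
import time

# Reuse whatever handlers your application already ships logs through.
logger = logging.getLogger("events")


def emit_event(name, **fields):
    """Serialize one event as a single JSON line and send it down the log pipeline."""
    event = {"name": name, "timestamp": time.time()}
    event.update(fields)  # arbitrary, high-cardinality context goes here
    line = json.dumps(event, sort_keys=True)
    logger.info(line)
    return line


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO, format="%(message)s")
    emit_event("page_load", route="/dashboard", load_ms=812, user_id="u-123")
```

Keeping each event as one self-describing JSON line means any log store that can filter and group on fields gives you a first taste of multi-dimensional querying, without adopting a new tool first.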

Favorite Quote:

"Can we be systematic about building solutions if we don't have sufficient visibility into the problems?"

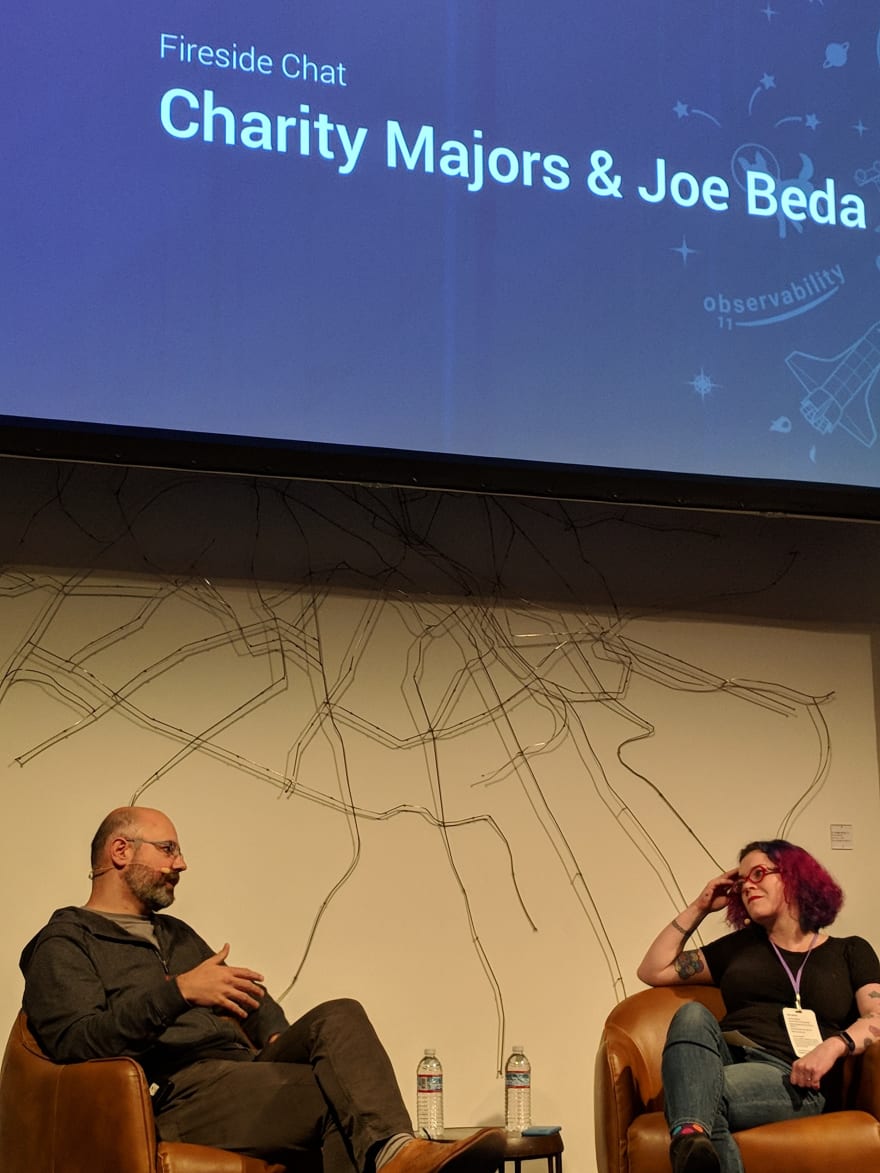

Charity Pt. 2, Ft. Joe Beda, CTO @ Heptio

At this point I am giving up any sort of narrative structure :D

These are my key takeaways from this talk:

- The key to effectiveness isn't just the power to effect change, but insight into what is wrong and into the state of your organization's world. You can have all the power in the world and not know how to use it to bring about change.

- These tools aren't a cure-all, and bringing engineers who traditionally haven't been on call into the rotation will hurt, but your paging rate should trend down and stabilize

- You can't predict production, which means you can't proactively monitor or test everything

- Kubernetes provides a solid bridge between solutions like Serverless and operating your own infrastructure, unlike the old path of moving off Heroku onto a VM, where the jump in knowledge needed to operate the application was much larger

- Adding monitoring and other sorts of reactive plumbing is writing software that doesn't move the bottom line, and writing software that doesn't move the bottom line is bad business at small scale

- As a startup, you get at most two "innovation tokens" to spend inventing something new that fuels your business; past that, it's about executing sustainably

- "Never try to build or architect for more than 10x the load you have right now"

Favorite Quote:

DESTROY DRUDGERY,

UNLOCK HUMAN POTENTIAL,

MINIMIZE "HAVE TO DO"s

Breakout Session #2, Transforming Culture Towards Observability

I'll be honest: I took no notes during my second breakout session, but it was an amazing discussion that yielded the following quote:

“Observability enables scientific-method driven development” @o11ycon (22:31, 02 Aug 2018)

Essentially, we talked about how dev and ops are disillusioned with management's unwillingness to invest, or to allow investment, in tools. That feeling also runs the other way: management is reluctant to invest if it sees no business value. So developers need to balance bottom-up improvement with lobbying for top-down support, and sell the value of observability tools to business stakeholders. In my mind this boiled down to developers needing tools as robust for their roles as Business Intelligence tools like Tableau are for product managers, analysts, and salespeople. This barely captures the great conversation the group had on a beautiful sunny rooftop, so... go to O11ycon next year :).

Breakout Session Recap Presentations

To finish off the day, several presenters came up to recap what their breakout groups discussed. Here were my key takeaways:

Group 1: Testing In Prod

- Release and deployment are just another step in testing your products; nothing other than production will ever properly reflect production conditions

- Decouple deploy from release
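Decoupling deploy from release usually means shipping code dark and turning it on separately, most often with feature flags. Below is a minimal Python sketch of a percentage rollout, assuming an in-memory flag table (`FLAGS`, `new_checkout`, `bucket`, and `is_enabled` are hypothetical; real setups typically read flags from a config store or flag service). Hashing the flag name together with the user ID gives each user a stable bucket, so raising the percentage only ever adds users.

```python
import hashlib

# Hypothetical flag table: flag name -> percent of users who get the feature.
FLAGS = {"new_checkout": 10}


def bucket(flag, user_id):
    """Map a (flag, user) pair to a stable bucket in the range [0, 100)."""
    digest = hashlib.sha256(f"{flag}:{user_id}".encode("utf-8")).hexdigest()
    return int(digest, 16) % 100


def is_enabled(flag, user_id, flags=FLAGS):
    """True if the user's bucket falls under the flag's current rollout percentage."""
    return bucket(flag, user_id) < flags.get(flag, 0)
```

With this shape, the code behind `new_checkout` can be deployed with the flag at 0, released to 10% of users, watched in production, and ramped up or rolled back without another deploy.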

Group 2: Making Observability Easier

- Rule of thumb: Observe at "service" boundaries
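One way to follow the "observe at service boundaries" rule of thumb is to wrap every call that leaves your service so it emits an event with the callee, outcome, and latency. A minimal Python sketch, assuming a decorator is an acceptable place to hook in; `observe_boundary`, `BOUNDARY_EVENTS`, and the `billing` service are hypothetical names, and the list stands in for a real event sink.

```python
import functools
import time

BOUNDARY_EVENTS = []  # stand-in sink; swap for your real event emitter


def observe_boundary(service):
    """Decorator that records one event per call crossing into `service`."""
    def decorator(fn):
        @functools.wraps(fn)
        def wrapper(*args, **kwargs):
            start = time.monotonic()
            event = {"service": service, "call": fn.__name__}
            try:
                result = fn(*args, **kwargs)
                event["ok"] = True
                return result
            except Exception as exc:
                event["ok"] = False
                event["error"] = type(exc).__name__
                raise  # callers still see the original exception
            finally:
                event["duration_ms"] = (time.monotonic() - start) * 1000
                BOUNDARY_EVENTS.append(event)
        return wrapper
    return decorator


@observe_boundary("billing")
def fetch_invoice(invoice_id):
    """Hypothetical outbound call; a real one would hit another service."""
    return {"id": invoice_id}
```

Because the event is recorded in `finally`, failures are captured alongside successes, and re-raising keeps the wrapped call's behavior unchanged for its callers.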

Group 3: Tying Observability To Business Goals

- Use the same data to drive towards business goals that you would use to drive towards technical goals, and show that there's value in even more granular data

- Create customer stories around your own (dev/ops) use-cases, and around those of business partners

Group 4: Observability Driven Development

- Observability diminishes suffering and the angst of uncertainty in the development process, because sufficient levels of observability mitigate unknowns about production environments

- Should there be a scale or index for levels of observability?

- How do devs balance the timeliness of data and queries against data volume, and what rate should we sample at?

- Observable systems create a bulwark against "alert fatigue": you can monitor for less when you include context on long-tail events
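On the sampling question, one common approach is to record the sample rate on every kept event so counts can be reweighted later, and to choose the rate per event so that errors and slow requests are always kept. Below is a minimal Python sketch under those assumptions; `choose_rate`, the 1-in-20 base rate, and the 1000 ms cutoff are illustrative choices, not recommendations from the session.

```python
import random


def choose_rate(event, base_rate=20):
    """Hypothetical policy: keep every error or slow request, sample the rest 1-in-base_rate."""
    if event.get("status", 200) >= 500 or event.get("duration_ms", 0) > 1000:
        return 1
    return base_rate


def sample_event(event, rng=random.random):
    """Return the event tagged with its sample rate, or None if it was dropped."""
    rate = choose_rate(event)
    if rate <= 1 or rng() < 1.0 / rate:
        return {**event, "sample_rate": rate}
    return None


def estimated_count(kept_events):
    """Each kept event stands in for `sample_rate` original events."""
    return sum(e["sample_rate"] for e in kept_events)
```

Storing `sample_rate` on each event is what lets a query turn roughly 1,000 kept routine successes back into an estimate of about 20,000 real ones, while the long-tail events that matter most are never thrown away.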

Big takeaway from @o11ycon: observable systems diminish “nervous coding” (00:09, 03 Aug 2018)

Aaaand that's it, those are my notes and takeaways from O11ycon, a little fleshed out, a little clunkily explained, but at least put to paper. I'll be trying to write more frequently (I say this every time but maybe this time I mean it), so if you're interested in some more carefully written stuff from me, feel free to hit that Follow button and to follow me on Twitter.