Prompt engineering is a vital aspect of working with large language models (LLMs) like ChatGPT. It involves crafting prompts that lead to accurate and useful responses. A prompt is a question or instruction given to an LLM to obtain a specific output.

Whether you are a software developer, data analyst, or business professional, understanding prompt engineering can help you get the most out of your LLM.

LLMs are powerful machine learning models trained on vast amounts of text data. They can produce fluent, high-quality text that is often hard to distinguish from human writing, and they can perform tasks such as content generation, content classification, and question answering.

Why do effective prompts matter?

Effective prompts are crucial for getting the best results from LLMs. The prompt text steers the model’s response in the right direction and lets us specify the information we need. A well-crafted prompt with the right keywords and conditions can extract specific information from the model, such as the capital of a country.

Conversely, a poorly constructed prompt can lead to inaccurate or irrelevant information. It is therefore essential to structure the prompt thoughtfully, choosing words and phrases that direct the model’s response. Doing so makes it far more likely that we receive the information we want from the LLM.

Criteria for good prompts

Let’s discuss the top 10 characteristics of a good prompt:

1. Relevancy

The prompt given to an LLM should be relevant to the task: clear, specific, and detailed enough for the model to generate the desired output. Ambiguity in the prompt can result in inaccurate or irrelevant responses.

2. Context

Context is information included in the request that gives the model a better framework for producing the desired output. It can be any relevant detail that informs the LLM, such as keywords, phrases, sample sentences, or even whole paragraphs. Providing context allows the model to generate more accurate and relevant responses.
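As a minimal sketch of the idea, assuming you assemble prompts in code before sending them to a model, the hypothetical helper `with_context` below places background details ahead of the task so the model reads the framework before the request. The function name and the "Context:"/"Task:" labels are illustrative choices, not a required format.

```python
def with_context(task: str, context: list[str]) -> str:
    """Hypothetical helper: put background details ahead of the task."""
    # Each context item becomes one bullet under a "Context:" label.
    context_block = "\n".join(f"- {item}" for item in context)
    # The task comes last, so the model reads the framework before the request.
    return f"Context:\n{context_block}\n\nTask: {task}"


prompt = with_context(
    "Write a product description for our new app.",
    [
        "The app is a budgeting tool for college students.",
        "It runs on Android and iOS.",
    ],
)
print(prompt)
```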

3. Tailored to the target audience

The prompt should be tailored to the target audience to ensure the generated text is appropriate and relevant. For instance, prompts designed to produce technical writing should differ from those designed for creative writing.

4. Designed for a specific use case

The prompt should be created with a specific use case in mind. Include details about where and why you are going to publish the material. These details shape the tone, language, and style of the generated output.

5. Include high-quality example data

The quality of the generated output depends heavily on the quality of any example data included in the prompt. The model is not retrained on these examples, but it does follow their patterns, so accurate and representative examples help it generate accurate and relevant output.
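In practice, data placed in a prompt is usually a handful of input/output examples, often called few-shot examples, which the model uses as a pattern to follow. The sketch below assumes a simple sentiment-labeling task; the helper name `few_shot_prompt`, the sample sentences, and the "Input:"/"Output:" layout are all hypothetical.

```python
def few_shot_prompt(examples: list[tuple[str, str]], query: str) -> str:
    """Hypothetical helper: build a few-shot prompt from labeled examples."""
    # Each example pairs an input with the output we want the model to imitate.
    shots = "\n\n".join(f"Input: {text}\nOutput: {label}" for text, label in examples)
    # The new input ends with an empty "Output:" line for the model to complete.
    return f"{shots}\n\nInput: {query}\nOutput:"


examples = [
    ("The update fixed every crash I had.", "positive"),
    ("The app now drains my battery in an hour.", "negative"),
]
prompt = few_shot_prompt(examples, "Setup took less than a minute.")
print(prompt)
```

Because the model tends to copy the pattern it sees, a mislabeled or unrepresentative example is likely to carry over into the answer, which is why the quality of this data matters.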

6. Proper wording

Wording plays a crucial role in the quality and accuracy of the generated output. The prompt should be clear, specific, and unambiguous so that the generated text is relevant and appropriate.

Levels of wording

Let’s see some examples of improving the wording in prompts to get accurate results:

- Vague wording: Generate a program that takes input and produces output.

This prompt needs to be more specific: it names no programming language and says nothing about the expected input or output. As a result, the model is likely to return irrelevant or inaccurate responses.

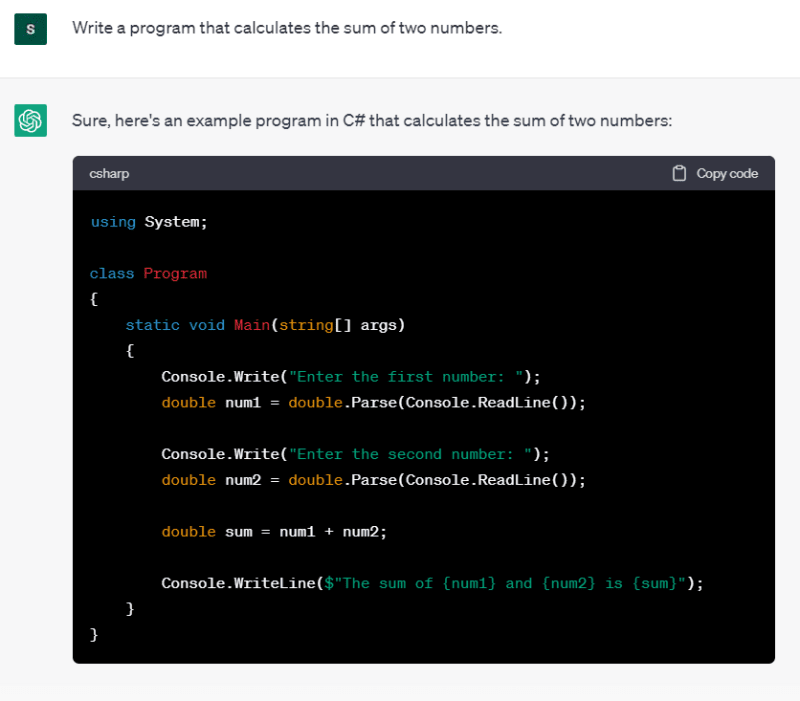

- Clear wording: Write a C# program that calculates the average of three numbers and displays the result.

This prompt is specific and gives the model a well-defined task. It includes relevant keywords, such as C# and average, to give context to the prompt.

- Technical wording: Develop a C# console application that implements a bubble sort algorithm on an array of integers.

This prompt is tailored for a technical audience and requires the model to know C# programming concepts and algorithms. It also includes technical terms such as console application and bubble sort algorithm.

- Creative wording: Create a C# program that tells a story using randomly generated words.

This prompt is designed for a creative writing use case and requires the model to generate imaginative and engaging text. It still includes the keyword C#, so the model knows which language to use.

- Domain-specific wording: Write a C# program that calculates the financial data for a retail store, including revenue, expenses, and profit margins.

This prompt is tailored for a specific use case related to financial data analysis. It includes relevant domain-specific terms such as revenue, expenses, and profit margins to provide context for the model.
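One way to move from vague to clear wording is to make the specifics mandatory. The sketch below assumes you generate coding prompts from a template; the `CodePrompt` class and its field names are hypothetical, and it refuses to render until the language, task, input, and output are all filled in.

```python
from dataclasses import dataclass


@dataclass
class CodePrompt:
    """Hypothetical template that forces a coding prompt to be specific."""
    language: str
    task: str
    input_spec: str
    output_spec: str

    def render(self) -> str:
        # A blank field is exactly the kind of gap that makes a prompt vague.
        for name, value in vars(self).items():
            if not value.strip():
                raise ValueError(f"missing detail: {name}")
        return (
            f"Write a {self.language} program that {self.task}. "
            f"Input: {self.input_spec}. Output: {self.output_spec}."
        )


prompt = CodePrompt(
    language="C#",
    task="calculates the average of three numbers",
    input_spec="three numbers entered by the user",
    output_spec="the average, displayed in the console",
).render()
print(prompt)
```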

7. Apply formatting

Formatting matters because it affects how the model interprets the prompt. Structuring a prompt with bullet points, numbered lists, and delimiters (such as triple quotes or ### markers that separate instructions from input text) makes each instruction and each piece of input easy to identify. A poorly formatted prompt can blur instructions and data together, leading to incomplete, irrelevant, or inaccurate responses. Formatting also improves the prompt’s readability and overall quality.
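A minimal sketch of these formatting habits, assuming a summarization task: the hypothetical `format_prompt` helper numbers the instructions and wraps the input text in triple-quote delimiters so the model can tell the instructions apart from the material it should work on. Any distinctive delimiter would serve the same purpose.

```python
def format_prompt(instructions: list[str], text: str, delimiter: str = '"""') -> str:
    """Hypothetical helper: numbered instructions plus delimited input text."""
    # Numbered steps make the order of the instructions explicit.
    steps = "\n".join(f"{i}. {step}" for i, step in enumerate(instructions, start=1))
    # Delimiters mark exactly where the input text starts and ends.
    return f"{steps}\n\n{delimiter}\n{text}\n{delimiter}"


prompt = format_prompt(
    [
        "Summarize the text delimited by triple quotes.",
        "Use at most three bullet points.",
        "Write for readers who are new to LLMs.",
    ],
    "Prompt engineering is the practice of writing inputs that guide a language model.",
)
print(prompt)
```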

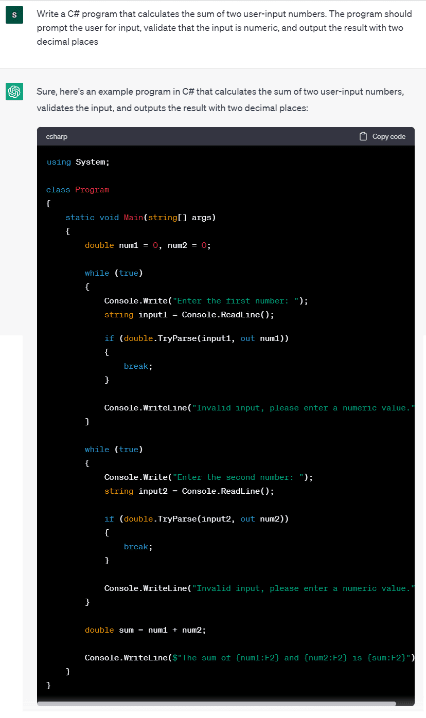

8. Provide conditions

Conditions are constraints or extra instructions added to a prompt to guide the response in a specific direction. They may be specific keywords, guidelines, or rules for the model to follow when producing content, such as a word limit. Overall, conditions give you greater control over the generated output and can improve the quality and relevance of the result.
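A minimal sketch, assuming a short marketing task: the hypothetical `add_conditions` helper lists each constraint on its own line, and the hypothetical `within_word_limit` check shows that a measurable condition can also be verified on the response afterward. The sample conditions are illustrative, and the model’s reply is stood in for by a hard-coded string.

```python
def add_conditions(prompt: str, conditions: list[str]) -> str:
    """Hypothetical helper: append explicit constraints to a prompt."""
    # One condition per line keeps each constraint visible instead of buried in prose.
    rules = "\n".join(f"- {condition}" for condition in conditions)
    return f"{prompt}\n\nFollow these conditions:\n{rules}"


def within_word_limit(response: str, max_words: int) -> bool:
    # A whitespace split is enough for a rough word-count check.
    return len(response.split()) <= max_words


prompt = add_conditions(
    "Write a tagline for a budgeting app.",
    ["Use at most 8 words.", "Mention saving money.", "Avoid exclamation marks."],
)
print(prompt)

sample_response = "Save money every day without thinking twice"  # stand-in for model output
print(within_word_limit(sample_response, 8))
```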

9. Appropriate vocabulary

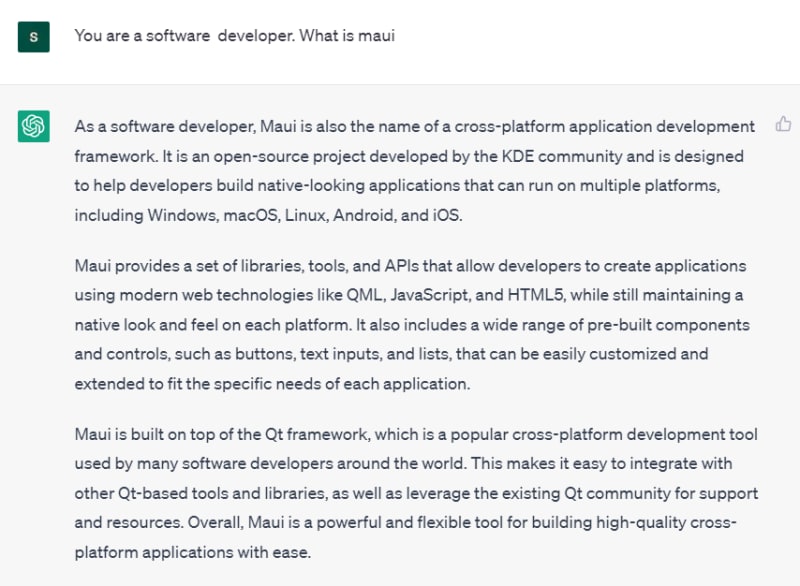

Using industry-specific terminology, jargon, or technical terms can improve the relevance and accuracy of the generated text for a specific use case. For example, Maui usually refers to the Hawaiian island, but to a developer it can mean .NET MAUI, Microsoft’s cross-platform framework for building native mobile and desktop apps. So in a prompt, a developer should write .NET MAUI rather than Maui. However, it is important to balance specialized vocabulary with simplicity and clarity so that the target audience understands the generated text. Incorporating feedback from users and domain experts can also help refine the vocabulary used in LLM prompts.

Examples:

- Technical writing prompt: “Write a detailed user manual for ……….”

- Creative writing prompt: “Write a short story about ……….”

- Academic writing prompt: “Write a research paper on ……….”

- Marketing copy prompt: “Write persuasive ad copy for……….”

10. Provide a role

Assigning a role to the LLM in the prompt can help establish expectations for the generated text’s tone, language, and style, making it more consistent, professional, and ultimately useful.
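In ChatGPT you can assign a role directly in the prompt text (for example, “Act as an experienced technical writer”). Chat-style APIs such as OpenAI’s Chat Completions API also accept a list of messages in which a `system` message sets the role and a `user` message carries the request. The sketch below only builds that message list, assuming this message shape; it sends nothing, and the `role_messages` helper is hypothetical.

```python
def role_messages(role: str, request: str) -> list[dict[str, str]]:
    """Hypothetical helper: pair a role-setting system message with a user request."""
    return [
        # The system message establishes who the model should act as.
        {"role": "system", "content": f"You are {role}."},
        # The user message holds the actual task.
        {"role": "user", "content": request},
    ]


messages = role_messages(
    "an experienced technical writer who explains concepts to beginners",
    "Explain what a REST API is in two short paragraphs.",
)
for message in messages:
    print(f"{message['role']}: {message['content']}")
```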

Examples

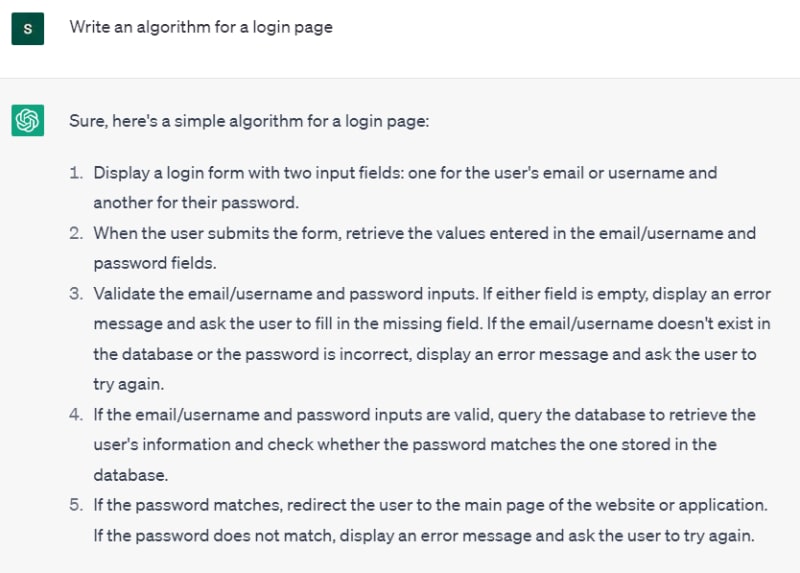

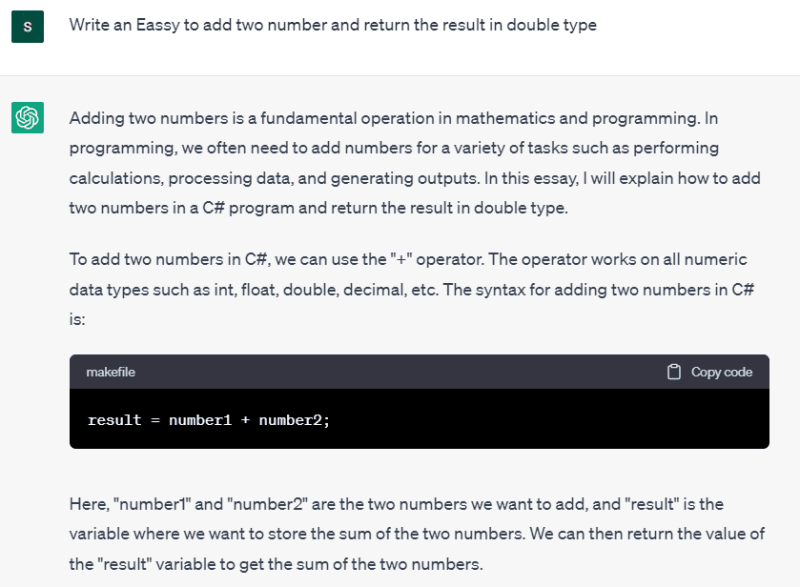

Let’s see some examples that use the prompt criteria we’ve covered. These examples all use ChatGPT as the LLM.

Conclusion

Thanks for reading! I hope this blog helped you understand why prompt engineering matters for getting accurate results from LLMs like ChatGPT. Try out the tips listed in this blog post and leave your feedback in the comments section below.

Syncfusion provides the world’s best UI component suite for building robust web, desktop, and mobile apps. It offers over 1,800 components and frameworks for WinForms, WPF, WinUI, .NET MAUI, ASP.NET (Web Forms, MVC, Core), UWP, Xamarin, Flutter, JavaScript, Angular, Blazor, Vue, and React platforms.

Current customers can access the latest version of Essential Studio from the License and Downloads page, and Syncfusion-curious developers can use the 30-day free trial to explore its features.

You can contact us through our support forums, support portal, or feedback portal. We are always happy to assist you!