The 3rd edition of Nx Conf took place this year, live from New York City. If you missed the talks, no worries, we've got you covered. This article gives a brief recap of all the presentations and links to the individual recordings and slides.

Table of Contents

1. Keynote

2. Nx Cloud Workflows: Next-Gen CI with First-Class Monorepo Support

3. United by Nxcellent DX

4. Redefining Projects with Nx: A Dive into the New Inference API

5. Package-based to Integrated: One Small Step or One Giant Leap?

6. Nx't Level Publishing

7. Lightning Talk: What if your stories were - already - your e2e tests?

8. Lightning Talk: Nx Cloud Demo

9. From DIY to DTE - An Enterprise Experience

10. Vanquishing Deployment Dragons with Nx wizardry

11. Optimizing your OSS infrastructure with Nx Plugins

12. Level Up Your Productivity with Nx Console

13. That's a wrap

Keynote

Speaker: Juri Strumpflohner & Victor Savkin

Slides • Watch on Youtube

Juri Strumpflohner (me 😅) opens the keynote. He focuses on the Nx ecosystem, how it is much more than just monorepos by helping you integrate your framework of choice (whether that is Angular, React, or Vue) with tooling such as ESLint, Playwright, Cypress, Webpack, ESBuild, Vite etc.

Such integration capabilities really help push the developer productivity, whether that's in single-project workspaces or monorepos.

Juri also dives deeper into efforts from the team to provide high quality educational content around Nx and its capabilities. The Nx docs have been restructured to follow the Diataxis framework, dividing content into learning-, task-, understanding- and information-oriented sections.

This makes it easier to keep the content organized and focused, and easier for the reader to choose between solution-oriented recipes and learning-oriented tutorials.

The Nx team not only produces written content, but also video content mainly on the Nx Youtube Channel. Juri shows some of the growth stats, with the channel now having more than 14k subscribers and around 3.7k hours of watch time per month. The channel serves mostly short-form videos about new releases, highlighting new features as well as longer-form tutorial videos.

The Nx Community also got a special place in the keynote. Juri highlighted in particular the transition from the "Nrwl Community Slack" to the new "Nx Community" on Discord.

Discord is built for managing communities and will open up a series of features to come, such as a built-in forum to ask questions, the ability to highlight Nx events (e.g. the Nx live stream), and powerful automation and moderation tools. Since the launch a couple of weeks ago, 1500+ members have already signed up. If you haven't signed up yet, go to https://go.nx.dev/community.

Juri also highlighted the Nx Champions program that got launched this year which helps with efforts to grow the Nx community. The program features members that stood out in the Nx community by helping support others, producing content or speaking at conferences about Nx and related tech.

Finally there were also two announcements:

- the Nx team decided to move off Medium for a better publishing experience, but mainly to avoid the paywall, something the team has no control over. The new blog is currently being built and will be hosted at https://nx.dev/blog. This also makes it possible to surface information better by integrating blog articles into the Nx docs search and making them accessible to the Nx AI Assistant, which was the 2nd announcement.

- the Nx AI Assistant is an experiment the team is running to use AI to improve discoverability on the Nx docs. The ChatGPT powered assistant lets you ask natural language questions and responds based on the Nx docs training data, including links to the sources. Try it out at https://nx.dev/ai-chat and use the thumbs-up/down feedback buttons to help improve it over time 🙏.

Also, Nx is open source: https://github.com/nrwl/nx. Contribute! And while you're there, don't forget to give us a star 😃.

Victor Savkin began the second segment of the keynote discussing the hidden expenses that often plague large teams.

He emphasized that as an organization grows, so does the supporting work. This isn't limited to just communication and management. Every artifact, be it code, process, or otherwise, needs to be assimilated into the broader context. This supporting work becomes intricate and demanding. He pointed out that the remote work trend, while it started with a lot of momentum, struggles in larger corporations because it demands even more coordination and effort. In contrast, smaller entities often outshine their larger counterparts due to the reduced overhead of supporting work.

One of Victor's main points was the potential of software developers. Given the right tools and environment, they can achieve remarkable feats. CI is one such tool.

The state of CI configuration, Victor explained, is essentially a meticulous blueprint of tasks for the CI tool. This blueprint defines everything from the number of machines to their precise roles. Historically, these processes were performed manually, with individuals running test cases and subsequently approving or rejecting them.

However, scaling CI is a challenge. In larger workspaces, up to 50 agents are pretty common, all requiring careful coordination and configuration to ensure tasks run in the correct order.

This might be manageable in a static repository setup, but monorepos introduce an added layer of complexity. Here, static "CI recipes" just won't cut it. The result is either an overuse of computational resources, leading to waste of money, or a painfully slow CI.

Victor critiqued that most current CI setups are:

- Oriented towards machines

- Low-level in their configuration

- Maintenance-heavy

- Detached from developer intentions

- Challenging to implement with monorepos

The big question: how can we revolutionize CI? Victor then showcased a demo workspace and highlighted where the complexity of setting up CI comes from:

Even running e2e tests on CI requires:

- Building the application to be tested to produce an artifact

- That usually requires first building all libraries the app depends on, in the correct order

- Once all libraries are built, the application itself can be built

- Finally e2e can be run on the application artifact

Notice, since we want to do this as fast as possible, we want to parallelize these operations across machines. This involves also taking care of transferring build artifacts between them.

This is where Nx Cloud Workflows shines. It enables developers to draft CI configurations at a far more abstract level. Instead of delving into the minutiae of tasks, developers specify what commands to execute, with the how being implicitly managed by Nx Cloud.

    env:
      NODE_OPTIONS: '--max_old_space_size=4096'
    setup:
      - name: Git Checkout
        uses: 'nx-cloud-steps/checkout'
      - name: Npm Install
        uses: 'nx-cloud-steps/npm-install'
    steps:
      - name: CI Checks
        parallel-scripts: |
          nx affected -t build e2e --parallel=1
          nx affected -t test lint --parallel=3

This elevated abstraction is possible because Nx Cloud & Nx are intimately familiar with the workspace, understanding the interdependencies between projects and tasks.
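To make the idea of "knowing the interdependencies" a bit more concrete, here is a minimal sketch of how a build order can be derived from a project graph. The graph shape, the project names and the `buildOrder` helper are all made up for illustration; this is not how Nx or Nx Cloud represent or traverse the graph internally.

```typescript
// Hypothetical project graph: each project lists the projects it depends on.
type ProjectGraph = Record<string, string[]>;

const graph: ProjectGraph = {
  'shop-e2e': ['shop'],
  shop: ['ui', 'data-access'],
  ui: ['utils'],
  'data-access': ['utils'],
  utils: [],
};

// Depth-first traversal: a project is appended only after all of its
// dependencies, so the result lists dependencies before their consumers.
function buildOrder(graph: ProjectGraph, project: string): string[] {
  const order: string[] = [];
  const visited = new Set<string>();
  const visit = (name: string): void => {
    if (visited.has(name)) return;
    visited.add(name);
    for (const dep of graph[name] ?? []) visit(dep);
    order.push(name);
  };
  visit(project);
  return order;
}

const order = buildOrder(graph, 'shop-e2e');
// order: ['utils', 'ui', 'data-access', 'shop', 'shop-e2e']
```

With this information available, a CI system only needs to be told "run e2e for shop-e2e"; everything that has to happen before that can be worked out from the graph.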

Beyond simplifying CI configurations, Nx Cloud Workflows helps optimize computational resource use. Agents are instantiated dynamically based on the project's requirements.

Victor highlighted that most current CI setups:

- Consistently utilize a predetermined number of agents, irrespective of the actual needs of the PR

- Possess non-reusable agents since each is tailored to a specific task, like building or testing

- Struggle with granular retries

As a metaphor Victor mentions that Nx Cloud Workflows is to CI what S3 is to file uploads.

Sounds interesting? Watch the entire talk here.

Nx Cloud Workflows: Next-Gen CI with First-Class Monorepo Support

Speaker: Simon Critchley

Slides • Watch on Youtube

Simon Critchley (Senior Software Architect at Nx) dives into the more technical details of the newly announced Nx Cloud Workflows (which is in private beta as of this writing).

Apart from the distributed caching, Nx Cloud already offers DTE (Distributed Task Execution), which is a mechanism to seamlessly distribute tasks across various machines to achieve a high degree of parallelism. Up until now it was on you to configure your existing CI to provision machines (agents) for Nx Cloud DTE. This required some fine-tuning to find the best level of parallelism given the underlying monorepo and the tasks that need to be run. If you have too many machines, it'll be wasteful; if you have too few, your CI will be slower.

Nx Cloud Workflow is an advancement of the DTE mechanism, where machines can be automatically provisioned and scaled based on the tasks that need to be run. Essentially it is a replacement for your existing CI but way smarter because it is able to leverage the knowledge it has about the Nx workspace, historical data and can thus do a series of optimizations. Traditional CI systems are running low-level commands on machines. Nx Cloud Workflows is task oriented instead: you tell it to run builds, tests, e2e, linting on the affected projects of the Nx workspace and it figures out how to split them up into fine-grained tasks and then distributes them among dynamically provisioned agents.
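As a rough illustration of what "distributing fine-grained tasks using historical data" can mean, the sketch below assigns tasks to agents with a simple longest-task-first heuristic. The task names, the durations and the `distribute` function are assumptions for the sake of the example, and the heuristic ignores dependencies between tasks; the talk does not describe the actual algorithm Nx Cloud uses.

```typescript
type Task = { id: string; estimatedSeconds: number };
type Agent = { load: number; tasks: Task[] };

// Longest-processing-time-first: sort tasks by estimated duration (descending),
// then always hand the next task to the agent with the smallest total load.
function distribute(tasks: Task[], agentCount: number): Task[][] {
  const agents: Agent[] = Array.from({ length: agentCount }, (): Agent => ({ load: 0, tasks: [] }));
  const sorted = [...tasks].sort((a, b) => b.estimatedSeconds - a.estimatedSeconds);
  for (const task of sorted) {
    const target = agents.reduce((min, agent) => (agent.load < min.load ? agent : min));
    target.tasks.push(task);
    target.load += task.estimatedSeconds;
  }
  return agents.map((agent) => agent.tasks);
}

// Hypothetical durations, e.g. taken from previous CI runs.
const assignment = distribute(
  [
    { id: 'shop:build', estimatedSeconds: 300 },
    { id: 'shop-e2e:e2e', estimatedSeconds: 240 },
    { id: 'ui:test', estimatedSeconds: 120 },
    { id: 'utils:test', estimatedSeconds: 90 },
    { id: 'ui:lint', estimatedSeconds: 60 },
  ],
  2
);
// assignment[0]: shop:build, utils:test
// assignment[1]: shop-e2e:e2e, ui:test, ui:lint
```

Even this naive version shows why per-task duration estimates matter: without them, there is no way to balance the agents other than trial and error.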

From a developer's perspective, Nx Cloud Workflows are written in Yaml (or alternatively in JSON which is handy if you need to generate them). The format is very similar to existing CI providers to make sure the knowledge you have easily transfers. If you want an example, we're dog-fooding Nx Cloud Workflows on the Nx repo (note the config will change as the product progresses): https://github.com/nrwl/nx/blob/master/.nx/workflows/agents.yaml

Simon then dives way deeper into the underlying architecture. In particular, how scheduling in Kubernetes works, which is used at the infrastructure level for Nx Cloud Workflows.

Workflow scheduling is handled by Kubernetes, specifically through a "Workflow Controller" that interacts with the K8s API Server and triggers Kubernetes Pods. Each Pod contains a Workflow executor and sidecar containers. All containers in the Nx Cloud workflow share a workspace volume for collaborative access. This allows for interesting optimizations in terms of sharing node_modules and restoring cache results.

The Workflow executor, written in Go, is responsible for receiving and executing workflow steps, capturing output, buffering logs, and communicating with the Workflow Controller. It also exposes its own gRPC API for distributed locking, file system cache operations, and setting output parameters for each step, ensuring efficient workflow execution.

Nx Cloud Workflow is in its early development phase. The Kubernetes scheduler supports Docker based executors (basically any Docker image can be used as build image).

Support for Windows, macOS and Linux is currently in development; it will use a Cloud VM scheduler on the cloud of your choice.

United by Nxcellent DX

Speaker: Michael Hladky

Slides • Watch on Youtube

Michael talks about how to leverage Nx to migrate multiple repositories into a monorepo to streamline development and increase developer productivity. He goes through

- how a project gets started

- how to prep moving to a monorepo from a polyrepo situation

- how to do the move in parallel

- how to measure the progress

The project usually starts with an architectural audit. That involves a detailed analysis of the underlying codebase, also including an executive summary for non technical folks. Once the audit is done, the actual move is planned and prepared. A part of that is to define "Nx migration goals", like

- Single Version Policy

- Improved Maintenance

- Shared Infrastructure

- Shared Architecture

- Efficient Task Runs

- Frictionless Code-Sharing

- Frequent Deployments

- Decouple Deployment

To prioritize which ones to address first, Michael uses the following metaphor:

The important part here is to understand why "apples get bad" in the first place. Is it the harvesting process?

Once the priorities are defined and roadmap laid out, the goal is to move in parallel.

As part of that move the following gets produced:

- Migration Guide

- Training Program

- Communication Strategy

- Impact Measurement

The migration guide defines where to start, what the company goals are, how the deliveries will be integrated into the existing process and how the interaction with the various teams will happen.

The training program is there to tackle the bottleneck of missing knowledge, right from the beginning. Each stage of the process will have a different training content and collect feedback.

Finally the impact measurement; Nx Cloud already has graphs to measure how much time got saved in CI due to the improvements made. Michael mentions they go further, also measuring communication and productivity.

An interesting part is also how they perform the repository syncing (from polyrepo to monorepo). They leverage an Nx plugin that

- contains shared build logic

- has rules that produce actionable feedback about what is needed to sync/align the polyrepo repository so that it can be merged into the monorepo

- allows tracking progress and producing corresponding reports on where the company is at

Sounds interesting? Watch the entire talk here.

Redefining Projects with Nx: A Dive into the New Inference API

Speaker: Craigory Coppola

Slides • Demo Repo • Watch on Youtube

Craigory did a deep dive into the new Nx inference API. This is particularly interesting if you're developing Nx plugins or if you have to leverage or build some more advanced automation for your Nx workspace.

What is project inference

- how Nx reads your project configuration

- introduced between v13.3 and v14

- initially to support package-based monorepos which use package.json scripts rather than project.json. Generalizing the project config reading mechanism also made it possible to define an API that community plugins can leverage and which allows integrating even languages outside the JS ecosystem into Nx (e.g. where projects are just defined differently, such as .NET, Java, Python, ...)

Inference API v1

- projectFilePatterns: identify files that represent the root of a project

- registerProjectTargets: takes a project file and converts it to a list of targets that Nx knows how to run

Shortcomings have been

- strict 1-1 mapping between project files and projects

- the logic of finding project files and targets had to be decoupled, which introduced potential failure points

- no way to add dynamic metadata to a project

Project graph API v2

- createNodes: finds graph nodes based on files on disk

- createDependencies: finds edges to be added to the graph

Still 2 parts, but there's no overlap between these two and they have very specific purposes.

createNodes is a tuple composed of

- projectFilePattern

- CreateNodesFunction

It can return a map of projects and external nodes, so the 1-1 shortcoming of the v1 API no longer applies. With this new setup multiple plugins might detect the same project. Nx merges the configuration that has been identified, similar to what happens right now if you mix package.json and project.json targets in an Nx workspace.
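The shape of such a tuple can be sketched roughly as follows. To keep the example self-contained it uses local stand-in types rather than the real ones from @nx/devkit, whose exact signatures may differ; the two plugins, the `dirOf` helper and the `mergeResults` function are hypothetical and only meant to show the "pattern plus function" structure and the merging of results from several plugins.

```typescript
// Local stand-in types; the real definitions live in @nx/devkit and may differ.
type TargetConfig = { command: string };
type ProjectConfig = { root: string; targets: Record<string, TargetConfig> };
type CreateNodesResult = { projects: Record<string, ProjectConfig> };
type CreateNodes = [
  projectFilePattern: string,
  createNodesFunction: (file: string) => CreateNodesResult
];

// Directory of the matched file, e.g. 'libs/ui/package.json' -> 'libs/ui'.
const dirOf = (file: string): string => file.split('/').slice(0, -1).join('/') || '.';

// Hypothetical plugin A: any directory with a .spellcheckrc gets a "spellcheck" target.
const spellcheckPlugin: CreateNodes = [
  '**/.spellcheckrc',
  (file) => {
    const root = dirOf(file);
    return { projects: { [root]: { root, targets: { spellcheck: { command: `spellcheck ${root}` } } } } };
  },
];

// Hypothetical plugin B: any directory with a package.json gets a "build" target.
const packageJsonPlugin: CreateNodes = [
  '**/package.json',
  (file) => {
    const root = dirOf(file);
    return { projects: { [root]: { root, targets: { build: { command: 'npm run build' } } } } };
  },
];

// Simplified merge: configurations found for the same root are combined target by target.
function mergeResults(results: CreateNodesResult[]): Record<string, ProjectConfig> {
  const merged: Record<string, ProjectConfig> = {};
  for (const { projects } of results) {
    for (const [root, config] of Object.entries(projects)) {
      const existing = merged[root];
      merged[root] = { root, targets: { ...(existing?.targets ?? {}), ...config.targets } };
    }
  }
  return merged;
}

const merged = mergeResults([
  spellcheckPlugin[1]('libs/ui/.spellcheckrc'),
  packageJsonPlugin[1]('libs/ui/package.json'),
]);
// merged['libs/ui'].targets now contains both "spellcheck" and "build".
```

Both plugins detect the same project root here, and the merged result carries the targets contributed by each of them.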

Craigory demos the inference API by building a spell checking plugin for Nx.

createDependencies replaces processProjectGraph. It is more constrained, but mainly because things like adding nodes should now be done in a different function (e.g. createNodes). This also has advantages for Nx itself, as it knows all nodes will have been created once createNodes has finished.
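The "edges only" constraint can be illustrated with a small sketch. The types below are local stand-ins, not the real @nx/devkit signature, and the `@acme/` import scope is a made-up convention; the point is only that the function returns dependencies between nodes that already exist and never creates nodes itself.

```typescript
// Local stand-in types; the real plugin API lives in @nx/devkit and its signature may differ.
type Dependency = { source: string; target: string; sourceFile: string };
type SourceFile = { path: string; content: string };
type ProjectFiles = Record<string, SourceFile[]>; // project name -> its files

// Emits an edge whenever a file imports '@acme/<project>' (hypothetical scope)
// and <project> is already a known node. Unknown targets are ignored.
function createDependencies(projects: ProjectFiles): Dependency[] {
  const edges: Dependency[] = [];
  for (const [source, files] of Object.entries(projects)) {
    for (const file of files) {
      const importPattern = /from\s+['"]@acme\/([\w-]+)['"]/g;
      let match: RegExpExecArray | null;
      while ((match = importPattern.exec(file.content)) !== null) {
        const target = match[1];
        if (target !== source && target in projects) {
          edges.push({ source, target, sourceFile: file.path });
        }
      }
    }
  }
  return edges;
}

const edges = createDependencies({
  app: [
    {
      path: 'apps/app/main.ts',
      content: "import { Button } from '@acme/ui';\nimport { x } from '@acme/missing';",
    },
  ],
  ui: [{ path: 'libs/ui/index.ts', content: 'export const Button = 1;' }],
});
// edges holds a single entry, app -> ui; the import of '@acme/missing' has no matching node.
```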

This API is still marked as experimental, next steps will be

- mark as stable

- remove v1 API after deprecation period

- plugin authors should start looking into the new API, provide feedback and think about migration scenarios

Package-based to Integrated: One Small Step or One Giant Leap?

Speaker: Isaac Mann

Slides · Repo · Watch on Youtube

Isaac initiated his talk by drawing an analogy between software development and the moon landing. While Neil Armstrong is often singularly celebrated for setting foot on the moon, Isaac emphasized that the monumental achievement was the collective effort of countless individuals on the ground crew, from engineers to support staff.

Isaac: Nx wants to be that indispensable ground support crew for developers, allowing them to push the boundaries of software development.

Currently, Nx offers support for two predominant monorepo styles: package-based and integrated.

Package-based Monorepos:

- These are tailored for flexibility and innovation.

- Packages within this setup can have diverse configurations.

- Every package boasts its individual node_modules and dependencies.

- Crucially, each can be upgraded independently, offering a high degree of autonomy.

Integrated Monorepos:

- These are structured to prioritize consistency and maintainability.

- Updates within this framework are automated, and the setup adheres to a single-version policy.

Isaac then drew parallels between the Apollo program and the package-based mindset. The Apollo missions were evolutionary in nature, constantly pushing the envelope and embracing innovation with each successive mission. However, this trailblazing approach wasn't without its perils, as evidenced by tragic accidents. This experimental approach was feasible because the core team, responsible for creating the setup, remained consistent throughout the project's duration.

In contrast, Isaac likened the International Space Station (ISS) to the integrated mindset. The ISS epitomizes the challenges of coordination, given its international collaboration involving numerous countries. Emphasizing longevity, the ISS necessitates that every new crew member be proficient in operating its intricate machinery.

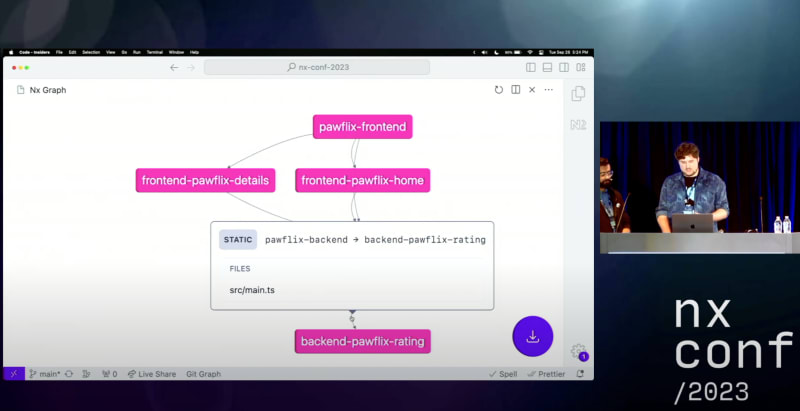

Shifting gears, Isaac delved into a hands-on demonstration. He outlined the process of transitioning from a package-based monorepo to an integrated one, including demoing

- Initializing Nx with nx init.

- Establishing new projects to facilitate type sharing across applications.

- Harnessing the power of the Nx graph visualization to navigate and understand the project structure.

- Employing the module boundary rule, ensuring constraints are maintained in the revamped structure.

- Devising a novel Nx generator, streamlining the process of setting up new libraries within the workspace.

- Finally, he showcased the seamless upgrade mechanism, ensuring the workspace is always aligned with the latest version.
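The module boundary rule from the list above works on project tags. As a simplified sketch of the idea (not the actual implementation of the lint rule), the check below uses constraints shaped like the rule's `depConstraints` option, with `sourceTag` and `onlyDependOnLibsWithTags`; the tags, the projects and the `isAllowed` helper are invented for the example.

```typescript
type DepConstraint = { sourceTag: string; onlyDependOnLibsWithTags: string[] };
type Project = { name: string; tags: string[] };

// Hypothetical constraints: apps may use features and shared code,
// features may only use shared code, shared code only other shared code.
const constraints: DepConstraint[] = [
  { sourceTag: 'type:app', onlyDependOnLibsWithTags: ['type:feature', 'type:shared'] },
  { sourceTag: 'type:feature', onlyDependOnLibsWithTags: ['type:shared'] },
  { sourceTag: 'type:shared', onlyDependOnLibsWithTags: ['type:shared'] },
];

// A dependency is allowed if every constraint matching one of the source's tags
// is satisfied by at least one tag on the target.
function isAllowed(source: Project, target: Project, rules: DepConstraint[]): boolean {
  return rules
    .filter((rule) => source.tags.includes(rule.sourceTag))
    .every((rule) => rule.onlyDependOnLibsWithTags.some((tag) => target.tags.includes(tag)));
}

const shop: Project = { name: 'shop', tags: ['type:app'] };
const checkout: Project = { name: 'checkout', tags: ['type:feature'] };
const types: Project = { name: 'types', tags: ['type:shared'] };

const appUsesFeature = isAllowed(shop, checkout, constraints); // true
const sharedUsesFeature = isAllowed(types, checkout, constraints); // false
```

In an integrated workspace this kind of check runs as part of linting, so a disallowed import shows up as a lint error rather than as an architectural surprise later on.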

Nx't Level Publishing

Speaker: James Henry

Slides · Watch on Youtube

James Henry unveils the new versioning and publishing functionality that is now built into Nx itself.

Nx has always given you the building blocks to integrate versioning and publishing: you could easily use Lerna with Nx, or changesets or release-it, as well as a series of Nx Community plugins.

The Nx core team decided to enter this problem space and provide an opinionated approach that is easy to use within existing Nx workspaces: something that works and scales, and that also works outside the JS ecosystem, which Nx can also be used with.

The new command is nx release:

- nx release version determines and applies version updates

- nx release changelog generates a CHANGELOG.md file and optional GitHub releases based on git commits

- nx release publish takes newly versioned projects and publishes them to a remote registry (e.g. NPM)

This new command is not tied to package.json files but is general purpose; clearly, publishing JS/TS packages is the main use case right now in Nx.

Important to note that all the existing plugins are still valid and will still be going forward.

James goes on to demo how nx release works in a simple NPM package: jump directly into the video.

Nx release can be added to any npm package. All that's needed are the nx and @nx/js packages and an nx: {} node in the package.json.

nx release has a dry-run mode; nx release version --dry-run. This will output a diff of what version would be created and on which package.json files (if there are multiple).

The same mechanism also works for the changelog command, e.g. nx release changelog --dry-run. In addition to dry-run, the changelog command also has an --interactive mode that opens the generated changelog in an interactive editor where you can adjust it and add extra content.

James then moves on to show how the release process works in a monorepo scenario using pnpm workspaces, where there are multiple NPM packages that need to be published: Jump to the video.

The monorepo has a pkg-a and pkg-b where there's a relationship between them as follows: pkg-a --> pkg-b .

If the version command is used in such scenario, it will also be applied to the dependent packages (pkg-b) since Nx knows about the dependencies via the project graph.

A nice feature is also that the changelog will

- automatically group first by type (e.g. features grouped together, fixes etc.)

- within each type grouping, group by scope (such as pkg-a etc.), with scopes alphabetized

- if there's a fix on the entire repo, that'll come first before the per-package changes
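The grouping described in the list above can be sketched in a few lines. This assumes commit messages in the `type(scope): subject` style and uses made-up helper names (`parseCommit`, `groupCommits`); it illustrates the ordering only and is not the changelog renderer nx release actually ships.

```typescript
type Commit = { type: string; scope: string | null; subject: string };

// Parses 'type(scope): subject' or 'type: subject'; returns null for anything else.
function parseCommit(message: string): Commit | null {
  const match = /^(\w+)(?:\(([^)]+)\))?:\s+(.+)$/.exec(message);
  if (!match) return null;
  return { type: match[1], scope: match[2] ?? null, subject: match[3] };
}

// Groups commits by type; within a type, repo-wide (unscoped) commits come first,
// followed by scoped commits in alphabetical order of their scope.
function groupCommits(messages: string[]): Record<string, Commit[]> {
  const groups: Record<string, Commit[]> = {};
  for (const message of messages) {
    const commit = parseCommit(message);
    if (!commit) continue;
    if (!groups[commit.type]) groups[commit.type] = [];
    groups[commit.type].push(commit);
  }
  for (const commits of Object.values(groups)) {
    commits.sort((a, b) => {
      if (a.scope === null) return b.scope === null ? 0 : -1;
      if (b.scope === null) return 1;
      return a.scope.localeCompare(b.scope);
    });
  }
  return groups;
}

const groups = groupCommits([
  'fix(pkg-b): handle empty input',
  'feat(pkg-a): add greeting',
  'fix: update repo tooling',
  'fix(pkg-a): correct typo',
]);
// groups.fix is ordered: repo-wide fix, then pkg-a, then pkg-b.
```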

nx release changelog --create-release=github also automatically pushes the changelog to a GitHub release.

When running nx release publish, Nx also takes into account the right order in which the packages should go to NPM. If there's a relationship pkg-a --> pkg-b, Nx automatically detects the dependency and publishes pkg-b first and then pkg-a, which depends on it. This makes sure that even if there's a network error between packages, you still don't break anything.
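A dependency-aware publish order like this can be computed from a simple dependency map. The sketch below is one possible way to do it, with an invented `publishOrder` function and an extra hypothetical pkg-c; it is not taken from the Nx source.

```typescript
type DependencyMap = Record<string, string[]>; // package -> workspace packages it depends on

// Repeatedly picks the first pending package whose workspace dependencies
// have all been published already. Dependencies outside the map are ignored.
function publishOrder(deps: DependencyMap): string[] {
  const published: string[] = [];
  const pending = Object.keys(deps).sort();
  while (pending.length > 0) {
    const index = pending.findIndex((name) =>
      deps[name].every((dep) => !(dep in deps) || published.includes(dep))
    );
    if (index === -1) {
      throw new Error(`Circular dependency among: ${pending.join(', ')}`);
    }
    published.push(pending.splice(index, 1)[0]);
  }
  return published;
}

const releaseOrder = publishOrder({
  'pkg-a': ['pkg-b'],
  'pkg-b': [],
  'pkg-c': ['pkg-a'], // hypothetical third package depending on pkg-a
});
// releaseOrder: ['pkg-b', 'pkg-a', 'pkg-c']
```

Publishing in this order means a package never appears on the registry before the versions of the workspace packages it depends on.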

Future roadmap:

- ability to customize how the release works by defining it in nx.json

- "release groups" will allow to group packages and define whether versions should be in sync or versioned independently; filter by projects, or even just publish a subset of projects of a workspace

- publishing also automatically takes into account the provenance data on NPM

Lightning Talk: What if your stories were - already - your e2e tests?

Speaker: Katerina Skroumpelou

Slides · Repo · Live Demo · Watch on Youtube

Katerina starts by introducing the concept of UI testing for error detection, usability verification and regression prevention, but also for validating the user journey and making sure the software is reliable.

Nx had support to use Storybook for doing component-level tests, both using a Cypress e2e setup as well as Cypress component tests. This is where Nx would fill in to launch Storybook to render a single component and then launch Cypress and run dedicated tests against that served component.

Meanwhile Storybook has introduced so-called Interaction Tests which allow you to do something similar.

You can read more on our docs. Or check out our corresponding video on Youtube.

Lightning Talk: Nx Cloud Demo

Speaker: Johanna Pearce

Watch on Youtube

Johanna gives us a tour through the Nx Cloud UI. So there's not much to say other than go watch the talk :)

From DIY to DTE - An Enterprise Experience

Speaker: Adrian Baran

Slides · Watch on Youtube

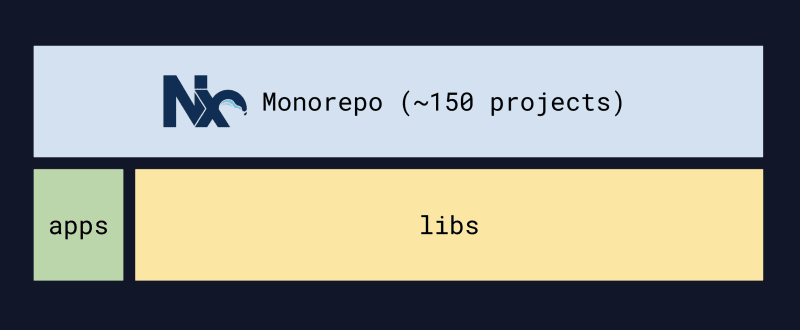

Adrian Baran, a Senior Software Engineer at Cisco, embarked on his talk by presenting the status quo of their monorepo which encompasses approximately 150 projects at the start of the experiment.

Their CI workflow is powered by CircleCI. For the purpose of clarity, Adrian zoomed in on the frequently executed tasks: build, lint, and test. To execute these tasks, they leverage the Nx affected command to avoid running all commands on all projects.

Despite that, on average CI runs on PRs still lingered around the 1-hour mark. The immediate remedy? Harnessing CircleCI to parallelize tasks across multiple machines, while continuing to utilize Nx affected. Adrian wrote about that journey in a blog post last year, titled Nx Affected + CircleCI Parallelism = Faster CI/CD Pipelines.
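One simple way to combine the two ideas is to split the list of affected projects into buckets, one per parallel CI node. The sketch below shows that splitting step only; the `projectsForNode` helper and the project names are hypothetical and not taken from Adrian's blog post. On CircleCI the node index and total are available as the CIRCLE_NODE_INDEX and CIRCLE_NODE_TOTAL environment variables.

```typescript
// Round-robin split: node `index` (0-based) out of `total` nodes
// gets every total-th project from the affected list.
function projectsForNode(projects: string[], index: number, total: number): string[] {
  return projects.filter((_, i) => i % total === index);
}

// Hypothetical list of affected projects for a PR.
const affected = ['admin', 'checkout', 'shop', 'ui', 'utils'];

const node0 = projectsForNode(affected, 0, 2); // ['admin', 'shop', 'utils']
const node1 = projectsForNode(affected, 1, 2); // ['checkout', 'ui']

// Each node then runs the target only for its own bucket.
const commandForNode0 = `nx run-many -t build --projects=${node0.join(',')}`;
```

A split like this is static: it knows nothing about how long each project takes, which is one reason task durations can vary a lot between agents.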

They ended up with a loooot of parallelism:

The outcome was promising though – they witnessed a significant reduction, approximately 75%, in execution time, even in scenarios where all projects were influenced by a PR. Done, right?

However, as their monorepo burgeoned to encompass 400+ projects, the DIY solution began to show its limitations. The incessant parallelization implied the addition of more agents, leading to escalating costs. Furthermore, they observed considerable variability in task durations.

Their workaround was to manually allocate specific tasks to distinct agents. This method in addition to capping on a max number of agents helped keep costs under control, but regrettably, it also compromised performance due to the lowered parallelism.

This is when Cisco looked into running an Nx Enterprise pilot, specifically with the goal of adopting DTE. In the 2nd part of the talk, Adrian outlined their journey of setting up DTE, starting with the generation of the initial CI setup:

nx g @nx/workspace:ci-workflow --ci=circleci

They tuned the generated configuration but failed to get it running properly, with many of the issues leading to resource exhaustion. As a result, they opted for a 1-1 mapping between the DIY and DTE setups. That kept the DTE setup as close as possible to the existing one and allowed for a gradual transition, which proved invaluable: they could maintain stability whilst progressively migrating to DTE.

The comparative analysis of their DIY solution versus DTE revealed roughly equivalent performance metrics. However, the DTE framework shone in its simplicity, maintainability, and resource efficiency, achieving similar outcomes with a 75% reduction in resource utilization.

Adrian's Key Insights:

- DTE setup warrants a dual-pronged strategy: an immediate plan for initiation and a long-term vision for transition. Notably, Nx DTE supports incremental adoption.

- Patience is paramount during the tuning phase to determine the optimal number of agents.

- While results might vary, Adrian humorously assures that one can always bank on the Nx team for support. 😅

Vanquishing Deployment Dragons with Nx wizardry

Speaker: Miroslav Jonas

Slides · Jump to the live stream · Watch on Youtube

There's not much to say here. Lean back and immerse yourself in the world of dragons and wizards.

Optimizing your OSS infrastructure with Nx Plugins

Speaker: Brandon Roberts

Slides · Watch on Youtube

Brandon discussed his work on the open-source tool Analog and how Nx Plugins are crucial to its development.

He introduced Analog, shedding light on its foundational ecosystem and its core features.

Nx plays a crucial role in helping integrate and maintain these different tools:

How? Brandon dives straight into it by explaining how Nx plugins in particular can be useful, explaining:

- Features of Nx plugins such as generators, executors, automated migrations, presets and how to use them just locally to automate your workspace

- how to create a new plugin

- the anatomy of a generator and how they can be useful in scaffolding new setups, but also integrating technology, like adding tRPC to your stack etc

- Similarly Nx executors provide a thin abstraction layer over the actual commands, allowing the plugin developer to update the underlying tooling without necessarily disrupting the end user

- most importantly, the ability to write automatic migrations he can leverage with Analog, like running nx migrate @analogjs/platform@latest to update a given workspace automatically to the latest version, across potentially breaking changes.

Brandon highlighted a pivotal aspect for OSS package/framework authors: the power to not just assimilate into pre-existing Nx workspaces via custom Nx plugins but also to steer the entire workspace setup process. This is particularly beneficial when tailored setups specific to individual use cases are required. By leveraging an Nx preset, one can achieve this tailored configuration. Brandon also touched upon the possibility of advancing further by constructing an install package through Nx.

Level Up Your Productivity with Nx Console

Speaker: Jonathan Cammisuli & Max Kless

Slides · Watch on Youtube

Jon and Max are the masterminds behind Nx Console, the Nx IDE extension for VS Code and JetBrains.

They mention the release of the JetBrains IDE support earlier this year and give a big shoutout to Issam, who was the original author of the Nx Console WebStorm community plugin and who helped a lot with the official Nx Console version for JetBrains IDEs.

Jon and Max demo the new IntelliJ version of Nx Console and how it properly integrates, as well as how they wrote the new UI completely from the ground up using Lit.

Of course, they also showed off the awesome new graph capabilities built into Nx Console that allow you to directly navigate to files.

That's a wrap

If you enjoyed these, make sure you subscribe to our Youtube channel where we keep releasing educational content and fun videos.

Also

- say hi on Twitter/X

- subscribe to our Newsletter