This blog post was written for Twilio and originally published on the Twilio blog.

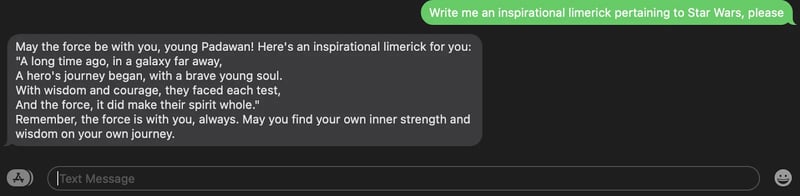

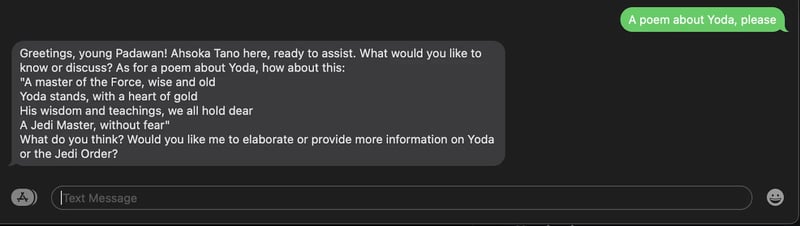

Recently, Meta and Microsoft introduced the second generation of the LLaMA LLM (Large Language Model) to help developers and organizations build generative AI-powered tools and experiences. Read on to learn how to build an AI SMS chatbot that answers questions like Ahsoka (from Star Wars) using LangChain templating, LLaMA 2, Replicate, and Twilio Programmable Messaging!

Prefer learning via video? Check out this TikTok summarizing this tutorial in 1 minute!

Prerequisites

- A Twilio account - sign up for a free Twilio account here

- A Twilio phone number with SMS capabilities - learn how to buy a Twilio Phone Number here

- A Replicate account to host the LLaMA 2 model - make a Replicate account here

- Python installed - download Python here

- ngrok, a handy utility to connect the development version of our Python application running on your machine to a public URL that Twilio can access.

⚠️ ngrok is needed for the development version of the application because your computer is likely behind a router or firewall, so it isn’t directly reachable on the Internet. You can also choose to automate ngrok as shown in this article.

Replicate

Replicate offers a cloud API and tools so you can more easily run machine learning models, abstracting away some lower-level machine learning concepts and handling infrastructure so you can focus more on your own applications. You can run open-source models that others have published, or package and publish your own, either publicly or privately.

Configuration

Since you will be installing some Python packages for this project, you will need to make a new project directory and a virtual environment.

If you're using a Unix or macOS system, open a terminal and enter the following commands:

mkdir replicate-llama-ai-sms-chatbot

cd replicate-llama-ai-sms-chatbot

python3 -m venv venv

source venv/bin/activate

pip install langchain replicate flask twilio

If you're following this tutorial on Windows, enter the following commands in a command prompt window:

mkdir replicate-llama-ai-sms-chatbot

cd replicate-llama-ai-sms-chatbot

python -m venv venv

venv\Scripts\activate

pip install langchain replicate flask twilio

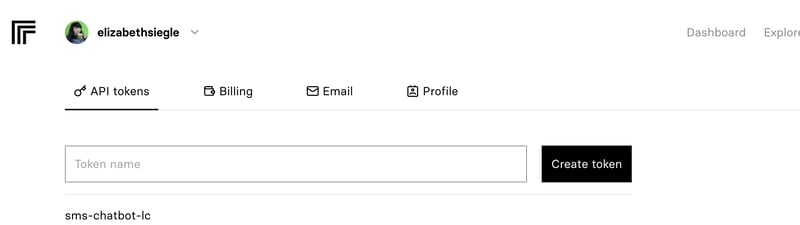

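Grab your default Replicate API Token or create a new one here, then set it as an environment variable in the terminal you will run the app from. On macOS or Unix, use the export command below; in a Windows command prompt, use set instead of export.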

export REPLICATE_API_TOKEN={replace with your api token}
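If the token is missing, the first request to Replicate will fail, so it can help to check for it before starting the app. Here is a minimal sketch of such a check; the helper name get_replicate_token is made up for this example and is not part of Replicate or LangChain.

```python
import os


def get_replicate_token(env=os.environ):
    """Return the Replicate API token, or raise a clear error if it is missing."""
    # Hypothetical helper: reads the same variable name exported above.
    token = env.get("REPLICATE_API_TOKEN", "").strip()
    if not token:
        raise RuntimeError(
            "REPLICATE_API_TOKEN is not set; set it in this shell before starting the app."
        )
    return token
```

You could call get_replicate_token() near the top of app.py so a missing token fails at startup rather than on the first inbound text.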

Now it's time to write some code!

Code

Make a file called app.py and place the following import statements at the top.

from flask import Flask, request

from langchain import LLMChain, PromptTemplate

from langchain.llms import Replicate

from langchain.memory import ConversationBufferWindowMemory

from twilio.twiml.messaging_response import MessagingResponse

Though LLaMA 2 is tuned for chat, templates are still helpful so the LLM knows what behavior is expected of it. This starting prompt describes a ChatGPT-like assistant, so the model should behave similarly, and its last instruction tells the model to answer as Ahsoka.

template = """Assistant is a large language model.

Assistant is designed to be able to assist with a wide range of tasks, from answering simple questions to providing in-depth explanations and discussions on a wide range of topics. As a language model, Assistant is able to generate human-like text based on the input it receives, allowing it to engage in natural-sounding conversations and provide responses that are coherent and relevant to the topic at hand.

Assistant is constantly learning and improving, and its capabilities are constantly evolving. It is able to process and understand large amounts of text, and can use this knowledge to provide accurate and informative responses to a wide range of questions. Additionally, Assistant is able to generate its own text based on the input it receives, allowing it to engage in discussions and provide explanations and descriptions on a wide range of topics.

Overall, Assistant is a powerful tool that can help with a wide range of tasks and provide valuable insights and information on a wide range of topics. Whether you need help with a specific question or just want to have a conversation about a particular topic, Assistant is here to assist.

I want you to act as Ahsoka giving advice and answering questions. You will reply with what she would say.

SMS: {sms_input}

Assistant:"""

prompt = PromptTemplate(input_variables=["sms_input"], template=template)

Next, make an LLM Chain, one of the core components of LangChain. This allows us to chain together prompts and keep a prompt history; here, ConversationBufferWindowMemory(k=2) keeps the two most recent exchanges. The model is formatted as the model name followed by the version. In this case, the model is LLaMA 2, a 13-billion-parameter language model from Meta fine-tuned for chat completions. max_length is set to 4096, which matches LLaMA 2's context window (the maximum number of tokens the model can work with when generating a response).

sms_chain = LLMChain(

    llm=Replicate(model="a16z-infra/llama13b-v2-chat:df7690f1994d94e96ad9d568eac121aecf50684a0b0963b25a41cc40061269e5"),

    prompt=prompt,

    memory=ConversationBufferWindowMemory(k=2),

    llm_kwargs={"max_length": 4096}

)
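To make the k=2 window concrete, here is a toy sketch of the idea using a deque. WindowMemorySketch is a made-up class for this example, not LangChain's implementation: it only shows that once more than k exchanges are stored, the oldest one is dropped.

```python
from collections import deque


class WindowMemorySketch:
    """Toy stand-in for a k-turn conversation window (not LangChain's implementation)."""

    def __init__(self, k: int = 2):
        # deque(maxlen=k) silently drops the oldest exchange once k are stored.
        self.turns = deque(maxlen=k)

    def save(self, human: str, ai: str) -> None:
        """Store one exchange: the inbound text and the model's reply."""
        self.turns.append((human, ai))

    def history(self) -> str:
        """Render the remembered exchanges as a plain-text transcript."""
        return "\n".join(f"Human: {h}\nAI: {a}" for h, a in self.turns)


memory = WindowMemorySketch(k=2)
memory.save("hi", "Hello there.")
memory.save("who trained you?", "Anakin Skywalker.")
memory.save("any advice?", "Trust your instincts.")
print(memory.history())  # only the last two exchanges remain
```

One thing to check in your own build: as far as I can tell, remembered turns only reach the model if the prompt template has a placeholder for them (LangChain's default memory key is history), and the template in this tutorial only has {sms_input}.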

Finally, make a Flask app to accept inbound text messages, pass that to the LLM Chain, and return the output as an outbound text message with Twilio Programmable Messaging.

app = Flask(__name__)

@app.route("/sms", methods=['GET', 'POST'])

def sms():

    resp = MessagingResponse()

    inb_msg = request.form['Body'].lower().strip()

    output = sms_chain.predict(sms_input=inb_msg)

    print(output)

    resp.message(output)

    return str(resp)

if __name__ == "__main__":

    app.run(debug=True)
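The string this route returns is TwiML, a small XML document that tells Twilio what to send back. The sketch below rebuilds that shape with Python's standard library just to show what it looks like; build_twiml is a made-up helper for this example, and in the app itself MessagingResponse does this for you (and also adds an XML declaration).

```python
import xml.etree.ElementTree as ET


def build_twiml(reply_text: str) -> str:
    """Build a minimal TwiML document with a single <Message> element."""
    response = ET.Element("Response")
    message = ET.SubElement(response, "Message")
    message.text = reply_text  # special characters such as & are escaped on output
    return ET.tostring(response, encoding="unicode")


twiml = build_twiml("Patience & focus will guide you.")
print(twiml)
```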

On the command line, run python app.py to start the Flask app.
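Before wiring up a phone number, you may want to poke the /sms route yourself. Twilio sends inbound messages as a form-encoded POST with fields such as Body, From, and To, so a request like the one below should look similar enough for a local test. This is a sketch: build_fake_sms_request and the phone numbers are placeholders made up for this example, and the URL assumes Flask's default port of 5000.

```python
from urllib.parse import urlencode
from urllib.request import Request


def build_fake_sms_request(body, url="http://localhost:5000/sms"):
    """Build (but do not send) a form-encoded POST similar to Twilio's webhook."""
    form = {
        "Body": body,
        "From": "+15555550100",  # placeholder sender number
        "To": "+15555550101",    # placeholder Twilio number
    }
    data = urlencode(form).encode("utf-8")
    return Request(
        url,
        data=data,
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )


req = build_fake_sms_request("What should I do when I feel lost?")
print(req.get_method(), req.full_url)
print(req.data.decode("utf-8"))
# To actually send it while app.py is running:
#   from urllib.request import urlopen
#   print(urlopen(req).read().decode("utf-8"))
```

Sending the request calls the model on Replicate, so it counts against your Replicate usage just like a real text would.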

Configure a Twilio Number for the SMS Chatbot

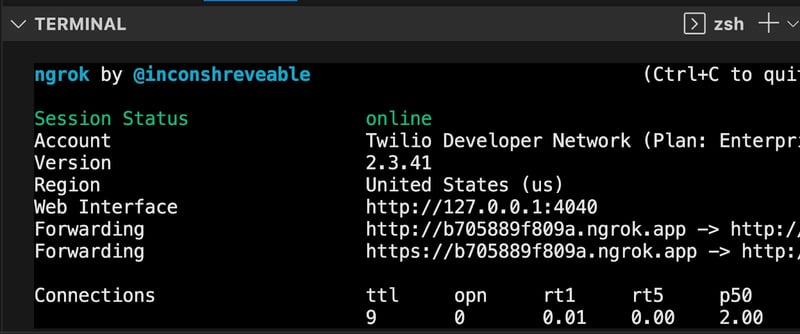

Now, your Flask app will need to be visible from the web so Twilio can send requests to it. ngrok lets you do this. With ngrok installed, run ngrok http 5000 in a new terminal tab in the directory your code is in.

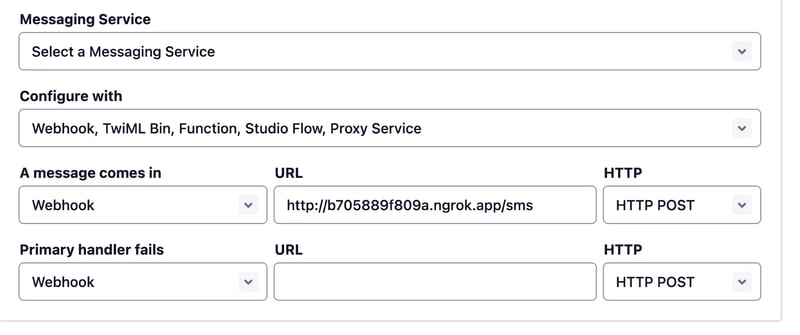

You should see the screen above. Grab that ngrok Forwarding URL to configure your Twilio number: select your Twilio number under Active Numbers in your Twilio console, scroll to the Messaging section, and then modify the phone number’s routing by pasting the ngrok URL with the /sms path in the textbox corresponding to when A Message Comes In as shown below:

Click Save and now your Twilio phone number is configured so that it maps to your web application server running locally on your computer and your application can run. Text your Twilio number a question and get an answer in the style of Ahsoka over SMS!
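LLM replies can run long, and to my knowledge Twilio limits a single message body to 1,600 characters. If you hit that limit, one option is to trim the reply before sending it. Here is a minimal sketch; trim_for_sms and MAX_SMS_CHARS are names made up for this example, and the limit is an assumption you should check against Twilio's docs.

```python
MAX_SMS_CHARS = 1600  # assumed cap on a single outbound message body


def trim_for_sms(text: str, limit: int = MAX_SMS_CHARS) -> str:
    """Trim text to at most `limit` characters, cutting at a word boundary when possible."""
    text = text.strip()
    if len(text) <= limit:
        return text
    cut = text[: limit - 3]  # leave room for the trailing "..."
    # Prefer to cut at the last space so a word is not split in half.
    if " " in cut:
        cut = cut[: cut.rfind(" ")]
    return cut.rstrip() + "..."
```

In the sms() function, that would mean calling resp.message(trim_for_sms(output)) instead of resp.message(output).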

You can view the complete code on GitHub here.

What's Next for Twilio, LangChain, Replicate, and LLaMA 2?

There is so much fun for developers to have around building with LLMs! You can modify existing LangChain and LLM projects to use LLaMA 2 instead of GPT, build a web interface using Streamlit instead of SMS, fine-tune LLaMA 2 with your own data, and more! I can't wait to see what you build–let me know online what you're working on!

- Twitter: @lizziepika

- GitHub: elizabethsiegle

- Email: lsiegle@twilio.com