This blog post was written for Twilio and originally published on the Twilio blog.

Rude or offensive comments can run rampant in today's online communication landscape; however, with the power of machine learning, we can start to combat this.

This blog post will show how to classify text as obscene or toxic on the client-side using a pre-trained TensorFlow model and TensorFlow.js. We'll then apply this classification to messages sent in a chat room using Twilio Programmable Chat.

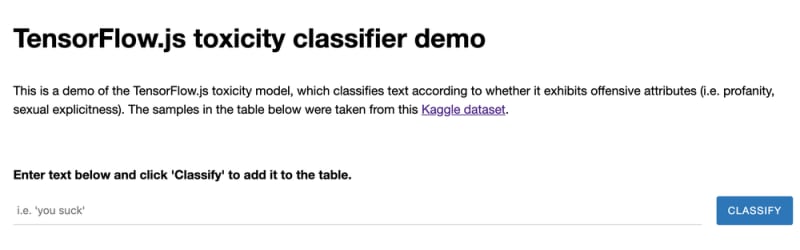

Google provides a number of pre-trained TensorFlow models which we can use in our applications. One of those models was trained on a labeled dataset of Wikipedia comments available on Kaggle. Google has a live demo of the pre-trained TensorFlow.js toxicity model on which you can test phrases.

Before reading ahead, you can also check out 10 Things You Need to Know before Getting Started with TensorFlow on the Twilio blog.

Setup

- Before you get started, clone the Twilio JavaScript chat demo repository with

git clone https://github.com/twilio/twilio-chat-demo-js.git

- Make sure you have a Twilio account so you can get your Account SID, API Key SID and Secret, and a Chat Service SID, which you can create in your Twilio Console Chat Dashboard

- On the command line, make sure you're in the directory of the project you just cloned

cd twilio-chat-demo-js

# make a new file credentials.json, copying it from credentials.example.json, and replace the credentials in it with the ones you gathered from your Twilio account

cp credentials.example.json credentials.json

# install dependencies

npm install

# then start the server

npm start

Now if you visit http://localhost:8080 you should be able to test out a basic chat application!

You can log in as a guest with a username of your choosing or with a Google account. Be sure to create a channel to start detecting potentially toxic messages with TensorFlow.js!

Incorporating TensorFlow.js into Twilio Programmable Chat

Open /public/index.html and somewhere in between the <head></head> tags, add TensorFlow.js and the TensorFlow Toxicity models with these lines:

<script src="https://cdn.jsdelivr.net/npm/@tensorflow/tfjs"></script>

<script src="https://cdn.jsdelivr.net/npm/@tensorflow-models/toxicity"></script>

This makes "toxicity" a global variable we can use with JavaScript code. Tada! You have installed the model.
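If the CDN scripts fail to load (for example, when you are offline), the toxicity global will be missing and later calls will throw. A small guard like the sketch below can make that failure easier to spot. toxicityIsAvailable is a hypothetical helper name, and passing the scope in as a parameter is only a convenience so the check can be tried outside a browser.

```javascript
// Hypothetical helper: reports whether the toxicity global from the <script>
// tags is present and exposes the load() function used later in this post.
function toxicityIsAvailable(scope) {
  return Boolean(scope.toxicity) && typeof scope.toxicity.load === 'function';
}

// In the browser you would pass window (or globalThis).
if (!toxicityIsAvailable(globalThis)) {
  console.warn('TensorFlow toxicity model script is not loaded.');
}
```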

In that same HTML file, above the typing-indicator div, add the following line, which will display warning text if a chat message is deemed offensive.

<div id="toxicity-indicator"><span></span></div>

Right beneath that, make the following style updates for that div.

<style>

#channel-messages {

margin-bottom: 100px;

position: relative;

width: 100%;

height: calc(100% - 100px);

overflow-y: auto;

}

#toxicity-indicator {

padding: 5px 15px;

font-weight: bold;

color: #E30000;

}

#toxicity-indicator span {

display: block;

min-height: 18px;

}

</style>

Now open /public/js/index.js and prepare to do a lot.

First we're going to create a function called classifyToxicity to retrieve predictions on how likely it is that the chat input is toxic. It takes two parameters: "input" and "model".

function classifyToxicity(input, model) {

We need to call the classify() method on the model to predict the toxicity of the input chat message. This method call returns a promise that is resolved with predictions.

console.log("input ", input);

return model.classify(input).then(predictions => {

predictions is an array of objects, one per label, each containing the probabilities for that label. The labels are the categories the TensorFlow model can provide predictions for: identity_attack, insult, obscene, severe_toxicity, sexual_explicit, threat, and toxicity. Next, we loop through that array and read three values for each label: the label name; the match, which is true if the probability of a match is greater than the threshold, false if the probability of no match is greater than the threshold, and null if neither is; and the prediction, which is the model's probability that the input matches the label.

return predictions.map(p => {

const label = p.label;

const match = p.results[0].match;

const prediction = p.results[0].probabilities[1];

console.log(label + ': ' + match + '(' + prediction + ')');

return match != false && prediction > 0.5;

}).some(label => label);

});

}

In the code above, a conditional checks, for each of the seven labels, whether the model is more than 50% confident that the input matches that label. The function then resolves to true if any of the labels has a positive prediction. The complete classifyToxicity() function should look like this:

function classifyToxicity(input, model) {

console.log('input ', input);

return model.classify(input).then(predictions => {

return predictions.map(p => {

const label = p.label;

const match = p.results[0].match;

const prediction = p.results[0].probabilities[1];

console.log(label + ': ' + match + '(' + prediction + ')');

return match != false && prediction > 0.5;

}).some(label => label);

});

}
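To make the shape of predictions more concrete, here is a minimal sketch that runs the same map and some logic against a hand-written sample instead of a loaded model. The samplePredictions values are made up for illustration and are not real model output, and anyLabelToxic is a hypothetical helper name; only the label, results[0].match, and results[0].probabilities layout comes from the function above.

```javascript
// Hand-written sample in the same shape that model.classify() resolves with.
// The numbers are invented for illustration; they are not real model output.
const samplePredictions = [
  { label: 'insult', results: [{ probabilities: [0.04, 0.96], match: true }] },
  { label: 'threat', results: [{ probabilities: [0.98, 0.02], match: false }] },
  { label: 'toxicity', results: [{ probabilities: [0.45, 0.55], match: null }] }
];

// Hypothetical helper: same condition as classifyToxicity, minus the logging.
function anyLabelToxic(predictions) {
  return predictions
    .map(p => p.results[0].match != false && p.results[0].probabilities[1] > 0.5)
    .some(flag => flag);
}

console.log(anyLabelToxic(samplePredictions)); // true
```

Note that a label whose match is null still counts as toxic here when its probability is above 0.5, because the condition only rules out labels whose match is false.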

Now we need to call this function whenever someone in the chat enters a new message.

Next we'll load the model with toxicity.load(), which accepts an optional threshold parameter. It defaults to 0.85, but in this blog post we set it to a constant of 0.9 so that the model has to be more confident before it reports a match. Given an input, which in this case is a chat message, the labels are the outputs the model is trying to predict, and the threshold is the minimum confidence the model needs before it reports true or false for each of the seven labels.

Theoretically, the higher the threshold, the higher the accuracy; however, a higher threshold also means the predictions are more likely to return null, because neither probability is above the threshold value. Feel free to experiment by changing the threshold value to see how that changes the predictions the model returns.
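The relationship between the threshold and the match value described above can be sketched in a few lines. matchFor is a hypothetical helper written to illustrate the rule; it is not the library's own code, and it assumes probabilities is ordered as [not a match, match], which is how classifyToxicity reads it.

```javascript
// Hypothetical helper illustrating how a threshold turns two probabilities
// into true, false, or null. Assumes probabilities = [notMatch, match].
function matchFor(probabilities, threshold) {
  const [notMatch, match] = probabilities;
  if (match > threshold) return true;      // confident it matches the label
  if (notMatch > threshold) return false;  // confident it does not match
  return null;                             // not confident either way
}

const probabilities = [0.12, 0.88]; // made-up values for illustration
console.log(matchFor(probabilities, 0.85)); // true
console.log(matchFor(probabilities, 0.9));  // null
```

Raising the threshold from 0.85 to 0.9 turns the same made-up prediction from true into null, which is the trade-off to keep in mind when experimenting.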

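Search for $('#send-message').on('click', function () { and add the first three lines below above it, so that the existing click handler ends up wrapped in the toxicity.load() callback (remember to close the callback with }); after the handler):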

$('#send-message').off('click');

const threshold = 0.9;

toxicity.load(threshold).then(model => {

$('#send-message').on('click', function () {

toxicity.load returns a Promise which is resolved with the model. Loading the model also means loading its topology and weights.

Topology: a file describing the architecture of a model (what operations it uses) and containing references to the model's weights which are stored externally.

Weights: binary files containing the model's weights, usually stored in the same directory as topology.

(referenced from TensorFlow guide on saving and loading models)

You can read more about topology and weights in the TensorFlow docs and Keras docs, and there are many research papers that detail them at a low level.

We're now going to add some extra code to the function that handles when a user tries to send a message. In between $('#send-message').on('click', function () { and var body = $('#message-body-input').val(); add:

$('#toxicity-indicator span').text('');

This will clear the warning message if we have set one. Next, within the send-message click event, we check the message using the classifyToxicity function. If it resolves as true, the message is not sent and we display a warning.

The complete code looks like:

toxicity.load(threshold).then(model => {

$('#send-message').on('click', function () {

$('#toxicity-indicator span').text('');

var body = $('#message-body-input').val();

classifyToxicity(body, model).then(result => {

if (result) {

$('#toxicity-indicator span').text('This message was deemed to be toxic, please be more kind when chatting in this channel.');

$('#message-body-input').focus();

} else {

channel.sendMessage(body).then(function () {

$('#message-body-input').val('').focus();

$('#channel-messages').scrollTop($('#channel-messages ul').height());

$('#channel-messages li.last-read').removeClass('last-read');

});

}

});

});

});
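One thing the handler above does not cover is what happens if classification fails, for example if the promise returned by classifyToxicity rejects. The sketch below pulls the decision into a standalone function so it can be tried without the chat UI. checkAndSend, sendFn, and warnFn are hypothetical names, classify stands in for a function like classifyToxicity with the model already bound, and blocking the message when classification fails is an assumption you may want to change.

```javascript
// Hypothetical sketch: decide whether to send a message, without touching the DOM.
// classify(body) should return a Promise that resolves to true for toxic input.
function checkAndSend(body, classify, sendFn, warnFn) {
  return classify(body).then(
    isToxic => {
      if (isToxic) {
        warnFn('This message was deemed to be toxic, please be more kind when chatting in this channel.');
        return 'blocked';
      }
      // sendFn may return a promise (like channel.sendMessage) or a plain value.
      return Promise.resolve(sendFn(body)).then(() => 'sent');
    },
    () => {
      // Assumption: fail closed, so nothing is sent if classification fails.
      warnFn('Could not check this message, please try again.');
      return 'error';
    }
  );
}

// Usage with stand-in functions instead of a real model and channel.
const fakeClassify = body => Promise.resolve(body.includes('rude'));
checkAndSend('hello there', fakeClassify, () => {}, console.warn)
  .then(outcome => console.log(outcome)); // sent
```

In the chat demo, sendFn would wrap channel.sendMessage and warnFn would set the text of the toxicity-indicator span.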

Let's save the file, make sure npm start is running from the command line, and test out the chat at localhost:8080!

You should see that the application detects toxic language and shows a warning. For friendlier user input, you won't get a warning message, but you can see the probabilities by having a look at the JavaScript console, as shown below:

Depending on your threshold, the probabilities of a message like "I love you you are so nice" may look something like

What's Next?

There are other use cases for this TensorFlow model: you could perform sentiment analysis, censor messages, send other warnings, and more! You could also try this with Twilio SMS or on other messaging platforms. Depending on your use case, you could also try out different toxicity labels. Stay tuned for more TensorFlow with Twilio posts! Let me know what you're building in the comments or online.

GitHub: elizabethsiegle

Twitter: @lizziepika

email: lsiegle@twilio.com